SGD Mini-Batches, Stochastic Gradient Descent: A Complete Guide

By: Ava

Mini-batch gradient descent gives a smoother convergence trajectory than pure stochastic gradient descent (SGD), whose updates can be erratic. In this article, you'll discover how mini-batch gradient descent works: it breaks the data down into manageable, bite-sized chunks.

The [Deep Learning Fundamentals] blog series consists of course notes taken while studying Andrew Ng's Deep Learning course on Coursera.
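A small experiment can illustrate why mini-batch updates are smoother than single-example SGD updates. The sketch below uses a hypothetical toy setup: 1-D linear regression with squared-error loss. The data and the names `grad` and `gradient_noise` are our own. It measures how far a random mini-batch gradient strays from the full-batch gradient. Under these assumptions the mean squared deviation shrinks roughly in proportion to 1/batch_size, so batch size 32 gives much steadier steps than batch size 1.

```python
import random

# Hypothetical toy problem: y = 3x + noise, per-example loss l_i(w) = (w * x_i - y_i)^2 / 2
random.seed(0)
N = 1000
xs = [random.uniform(-1.0, 1.0) for _ in range(N)]
ys = [3.0 * x + random.gauss(0.0, 0.5) for x in xs]

def grad(w, idx):
    """Average gradient of the squared-error loss over the examples in idx."""
    return sum((w * xs[i] - ys[i]) * xs[i] for i in idx) / len(idx)

def gradient_noise(w, batch_size, trials=2000):
    """Mean squared deviation of a random mini-batch gradient from the full-batch gradient."""
    full = grad(w, range(N))
    total = 0.0
    for _ in range(trials):
        batch = random.sample(range(N), batch_size)  # sampled without replacement
        total += (grad(w, batch) - full) ** 2
    return total / trials

noise_sgd = gradient_noise(w=0.0, batch_size=1)
noise_mb = gradient_noise(w=0.0, batch_size=32)
print(f"batch_size=1:  {noise_sgd:.4f}")
print(f"batch_size=32: {noise_mb:.4f}")
```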


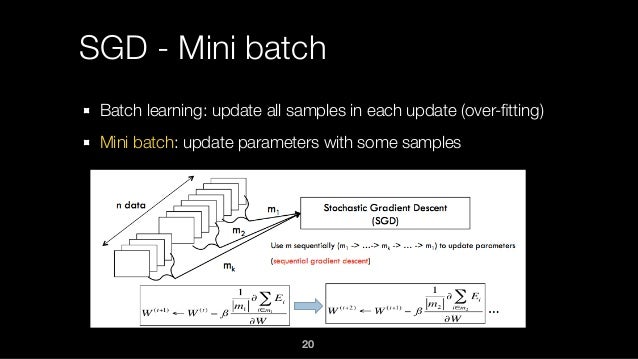

Two widely used variants of gradient descent are batch gradient descent and stochastic gradient descent (SGD). These variants differ mainly in how they process data. Mini-batch gradient descent (also called mini-batch stochastic gradient descent, or mini-batch SGD) combines batch gradient descent and stochastic gradient descent.

Mini-batch gradient descent is a variant of the gradient descent algorithm that is commonly used to train deep learning models. The idea behind this algorithm is to divide the training data into small batches.

Stochastic gradient descent algorithms with mini-batches usually take either the mini-batch size or the number of mini-batches as a parameter. A natural question is whether all of the mini-batches need to be exactly the same size.

For mini-batch gradient descent and SGD, the optimization path has a stochastic component at every step, because the data points used for each update are sampled at random.
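On the question of equal sizes: in common practice the mini-batches need not all be the same size. When the batch size does not divide the dataset size, the final mini-batch is either kept smaller or dropped. PyTorch's `torch.utils.data.DataLoader`, for instance, exposes this choice through its `drop_last` flag. The helper below is a minimal sketch of that splitting step in plain Python. `make_mini_batches` is a hypothetical name of ours, not a library function.

```python
import random

def make_mini_batches(n_examples, batch_size, shuffle=True, drop_last=False, seed=None):
    """Split example indices 0..n_examples-1 into mini-batches.

    The last mini-batch is smaller when batch_size does not divide n_examples,
    unless drop_last=True, in which case that incomplete batch is discarded.
    """
    indices = list(range(n_examples))
    if shuffle:
        random.Random(seed).shuffle(indices)  # random order of examples
    batches = [indices[i:i + batch_size] for i in range(0, n_examples, batch_size)]
    if drop_last and batches and len(batches[-1]) < batch_size:
        batches.pop()
    return batches

batches = make_mini_batches(10, batch_size=4, seed=0)
print([len(b) for b in batches])                                    # [4, 4, 2]
print([len(b) for b in make_mini_batches(10, 4, drop_last=True)])   # [4, 4]
```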

Machine learning fundamentals for everyone – AIVN-Machine-Learning/Week 5/Stochastic, Mini-Batch, Batch Gradient Descent.ipynb at master

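Mini-batch stochastic gradient descent (mini-batch SGD) is a core optimization method in deep learning that balances computational efficiency against training stability. This article gives a systematic introduction to mini-batch SGD.

Definition of a batch in machine learning: the set of examples used in one iteration (one update step) when training a model. Here, an iteration means one training step (forward and backward pass) performed with the chosen batch size.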

A variant of this is stochastic gradient descent (SGD), which is equivalent to mini-batch gradient descent where each mini-batch has just 1 example. The update rule stays the same; what changes is that the gradient is computed on just one training example at a time.
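The three update rules can be written side by side for reference. The notation is our own assumption rather than the source's: parameters \(\theta\), learning rate \(\alpha\), \(m\) training examples, a per-example loss \(\ell\), and a mini-batch \(\mathcal{B}_t\) of example indices at step \(t\). SGD is the special case \(|\mathcal{B}_t| = 1\).

```latex
\begin{aligned}
\text{Batch GD:}\quad & \theta \leftarrow \theta - \alpha \,\frac{1}{m}\sum_{i=1}^{m} \nabla_\theta\, \ell\big(\theta; x^{(i)}, y^{(i)}\big) \\
\text{Mini-batch GD:}\quad & \theta \leftarrow \theta - \alpha \,\frac{1}{|\mathcal{B}_t|}\sum_{i \in \mathcal{B}_t} \nabla_\theta\, \ell\big(\theta; x^{(i)}, y^{(i)}\big) \\
\text{SGD } (\mathcal{B}_t = \{i_t\}):\quad & \theta \leftarrow \theta - \alpha \,\nabla_\theta\, \ell\big(\theta; x^{(i_t)}, y^{(i_t)}\big)
\end{aligned}
```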

Deep learning thrives with large neural networks and large datasets. However, larger networks and larger datasets result in longer training times that impede research and development.

In this lesson, you'll learn how to implement mini-batch gradient descent to train a neural network model efficiently using PyTorch. The process involves loading and preparing the data, then defining and training the model.
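The shape of a mini-batch training loop can be sketched as follows. To stay dependency-free, this sketch uses plain Python and a hypothetical one-feature linear model instead of a PyTorch neural network. `train_minibatch_gd` and the synthetic data are illustrative assumptions. Setting `batch_size=len(xs)` turns the loop into batch gradient descent, and `batch_size=1` turns it into plain SGD.

```python
import random

def train_minibatch_gd(xs, ys, batch_size=32, lr=0.1, epochs=50, seed=0):
    """Fit y ~ w*x + b by mini-batch gradient descent on (1/2) * mean squared error.

    batch_size=len(xs) gives batch GD; batch_size=1 gives plain SGD.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    n = len(xs)
    indices = list(range(n))
    for _ in range(epochs):
        rng.shuffle(indices)  # fresh example order every epoch
        for start in range(0, n, batch_size):
            batch = indices[start:start + batch_size]
            # Accumulate gradients of (1/2)*(w*x + b - y)^2 over this mini-batch
            gw = gb = 0.0
            for i in batch:
                err = w * xs[i] + b - ys[i]
                gw += err * xs[i]
                gb += err
            # Step with the mini-batch average gradient
            w -= lr * gw / len(batch)
            b -= lr * gb / len(batch)
    return w, b

# Hypothetical synthetic data from y = 2x - 1 plus small noise
data_rng = random.Random(42)
xs = [data_rng.uniform(-1.0, 1.0) for _ in range(500)]
ys = [2.0 * x - 1.0 + data_rng.gauss(0.0, 0.1) for x in xs]

w, b = train_minibatch_gd(xs, ys, batch_size=32)
print(f"w={w:.3f}, b={b:.3f}")
```

In PyTorch, the same structure is usually expressed with a `DataLoader(dataset, batch_size=..., shuffle=True)` feeding an optimizer such as `torch.optim.SGD`. The per-batch step there is `optimizer.zero_grad()`, `loss.backward()`, `optimizer.step()`.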

Machine Learning cơ bản (Basic Machine Learning)

- Morvan (莫凡) PyTorch Tutorial (6): Mini-batches and Optimizers in PyTorch

- Batch Gradient Descent vs Mini-Batch Gradient Descent vs Stochastic Gradient Descent

- Lecture 10 Stochastic gradient descent


- [Deep Learning Fundamentals] Lesson 15: Mini-batch Gradient Descent

Mini-batch gradient descent, or stochastic gradient descent on a mini-batch? I'm not sure what "stochastic gradient descent on a mini-batch" means.

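Comparison between batch GD, SGD, and mini-batch GD: this is a brief overview of the different variants of gradient descent.

Selecting just 1 sample at a time and then adjusting the parameters based on the result is the well-known stochastic gradient descent (SGD), which can also be called SGD with a batch size of 1. The batch size is the number of samples selected before each parameter adjustment.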

In mini-batch GD, we use a subset of the dataset to take each step in the learning process. Therefore, the mini-batch size can be greater than one and less than the size of the full training set.

As far as I know, when stochastic gradient descent is used as the learning algorithm, some people use "epoch" for a pass over the full dataset and "batch" for the data used in a single update step.
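These terms are related by simple arithmetic. With \(N\) training examples and batch size \(B\), one epoch (one pass over the full dataset) consists of \(\lceil N/B \rceil\) iterations, or \(\lfloor N/B \rfloor\) if an incomplete final batch is dropped. The hypothetical helper below, `iterations_per_epoch`, just encodes that count.

```python
def iterations_per_epoch(n_examples, batch_size, drop_last=False):
    """Number of parameter updates (iterations) in one epoch, i.e. one full pass over the data."""
    if drop_last:
        return n_examples // batch_size                  # incomplete final batch discarded
    return (n_examples + batch_size - 1) // batch_size   # ceiling division

n = 1000
print(iterations_per_epoch(n, 1))                   # 1000: SGD, one update per example
print(iterations_per_epoch(n, 32))                  # 32: mini-batch GD (the last batch holds 8 examples)
print(iterations_per_epoch(n, 32, drop_last=True))  # 31
print(iterations_per_epoch(n, n))                   # 1: batch GD, one update per epoch
```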

[22]. Traditional SGD processes one example per iteration. This sequential nature makes SGD challenging for distributed inference. A common practical solution is to employ mini-batch training.

In mini-batch training, if each batch contains just 1 sample, the method is also called stochastic gradient descent (SGD).
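Mini-batches suit distributed training partly because the mini-batch gradient is an average of per-example gradients, so the work can be split across workers. The sketch below is a simplified, single-process simulation; the names and the toy squared-error loss are assumptions. Each hypothetical worker averages the gradient over an equal-sized shard, and averaging those shard results reproduces the full mini-batch gradient. With unequal shards, each worker's result would have to be weighted by its shard size.

```python
import math

def mean_grad(w, xs, ys):
    """Mean gradient of (1/2)*(w*x - y)^2 with respect to w over the given examples."""
    return sum((w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

def data_parallel_grad(w, xs, ys, n_workers):
    """Simulate n_workers that each compute the mean gradient on an equal-sized shard
    of the mini-batch; averaging their results stands in for an all-reduce."""
    shard = len(xs) // n_workers
    if shard * n_workers != len(xs):
        raise ValueError("this sketch assumes the mini-batch splits into equal shards")
    local = [
        mean_grad(w, xs[k * shard:(k + 1) * shard], ys[k * shard:(k + 1) * shard])
        for k in range(n_workers)
    ]
    return sum(local) / n_workers

# A hypothetical mini-batch of 8 examples from y = 2x + 1
xs = [0.1 * i for i in range(8)]
ys = [2.0 * x + 1.0 for x in xs]
single = mean_grad(0.5, xs, ys)
parallel = data_parallel_grad(0.5, xs, ys, n_workers=4)
print(math.isclose(single, parallel))  # True
```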

The meaning of batch size, mini-batch, and epoch

Learn about Stochastic Gradient Descent (SGD), its challenges, enhancements, and applications in Machine Learning for efficient model optimisation.

In this paper we studied the convergence guarantees of mini-batch stochastic gradient descent in the generic shuffling and random reshuffling regime. We have two novel results for random reshuffling.

Table 3: Performance of hierarchical local SGD when training a ResNet-20 model on the CIFAR-10 dataset (10-GPU Kubernetes cluster). Table 3 compares mini-batch SGD with hierarchical local SGD.
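The shuffling regimes mentioned above differ in how the order of examples is drawn in each epoch. The sketch below contrasts three common schemes:

- shuffling once and reusing that permutation,
- random reshuffling (a fresh permutation every epoch),
- sampling with replacement, included for contrast.

This is an illustrative assumption of ours. We do not claim how exactly these map onto the paper's "generic shuffling" regime. In each case, consecutive chunks of an epoch's order form that epoch's mini-batches.

```python
import random

def epoch_orders(n, epochs, mode, seed=0):
    """Index order visited in each epoch under a given sampling scheme.

    mode="shuffle_once":     one random permutation, reused every epoch
    mode="random_reshuffle": a fresh random permutation every epoch
    mode="with_replacement": n indices drawn independently and uniformly
    """
    rng = random.Random(seed)
    base = list(range(n))
    rng.shuffle(base)
    orders = []
    for _ in range(epochs):
        if mode == "shuffle_once":
            orders.append(list(base))
        elif mode == "random_reshuffle":
            perm = list(range(n))
            rng.shuffle(perm)
            orders.append(perm)
        elif mode == "with_replacement":
            orders.append([rng.randrange(n) for _ in range(n)])
        else:
            raise ValueError(f"unknown mode: {mode}")
    return orders

# Mini-batches of size 4 under random reshuffling, for two epochs
for epoch, order in enumerate(epoch_orders(10, epochs=2, mode="random_reshuffle")):
    batches = [order[i:i + 4] for i in range(0, len(order), 4)]
    print(epoch, batches)
```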

Mini-batch SGD with momentum is a fundamental algorithm for learning large predictive models. In this paper we develop a new analytic framework to analyze noise
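For reference, mini-batch SGD with momentum in the heavy-ball form keeps a velocity \(v\) and updates \(v \leftarrow \beta v + g\), then \(\theta \leftarrow \theta - \eta v\), where \(g\) is the mini-batch gradient. This matches the form documented for PyTorch's `torch.optim.SGD` with momentum when dampening and Nesterov are off. The sketch below is a minimal scalar version. The noisy quadratic objective stands in for mini-batch gradient noise; like the function names, it is a hypothetical choice.

```python
import random

def sgd_momentum(grad_fn, theta0, lr=0.1, beta=0.9, steps=200):
    """Heavy-ball momentum: v <- beta*v + g, then theta <- theta - lr*v."""
    theta, v = theta0, 0.0
    for _ in range(steps):
        g = grad_fn(theta)      # stochastic (mini-batch) gradient estimate
        v = beta * v + g        # exponentially weighted sum of past gradients
        theta = theta - lr * v
    return theta

rng = random.Random(0)

def noisy_grad(theta):
    """Hypothetical objective f(theta) = (theta - 3)^2 / 2; Gaussian noise mimics mini-batch sampling."""
    return (theta - 3.0) + rng.gauss(0.0, 0.05)

theta = sgd_momentum(noisy_grad, theta0=0.0)
print(f"theta = {theta:.3f}")  # expected to land close to the minimizer 3.0
```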

How Mini-Batch Gradient Descent Works

Imagine dividing a large dataset into several mini-batches. Each batch is processed in turn, contributing to the overall learning of the model. At each step, the algorithm uses a subset of the training examples, sampled without replacement, called a mini-batch. The size of the mini-batch is a hyperparameter of the algorithm.

Mini-batch gradient descent: applying machine learning is a highly empirical process that involves many iterations. You often need to train many models before finding the right one, so optimization algorithms that speed up training are valuable.

In practice, first-order optimization methods are commonly used. Typical first-order optimizers include BGD, SGD, mini-batch GD, Momentum, Adagrad, RMSProp, Adadelta, and Adam.

Like SGD, mini-batch gradient descent starts each epoch by randomly shuffling the data and then splitting the whole dataset into mini-batches, each with \(n\) data points.

Batch gradient descent uses all samples at each step. Mini-batch gradient descent uses one mini-batch at each step; for example, with batch_size = 32, each step uses 32 images.
