Regularization Part 1: Ridge Regression

By: Ava

A complete guide to regularization (ridge regression, L2; lasso regression, L1; elastic net), a series of 3 videos: 1. Regularization Part 1: Ridge Regression (L2); 2. Regularization Part 2: Lasso Regression (L1); 3. Regularization Part 3: Elastic Net Regression.

To aid in understanding the concept behind the term: why is it called RIDGE regression? (Why "ridge"?) And what is wrong with the usual regression that a new concept called ridge regression needed to be introduced? Your insights would be great.

Basics of Linear Regression Modeling and Ordinary Least Squares (OLS): Context of Linear Regression; Optimization to Obtain the OLS

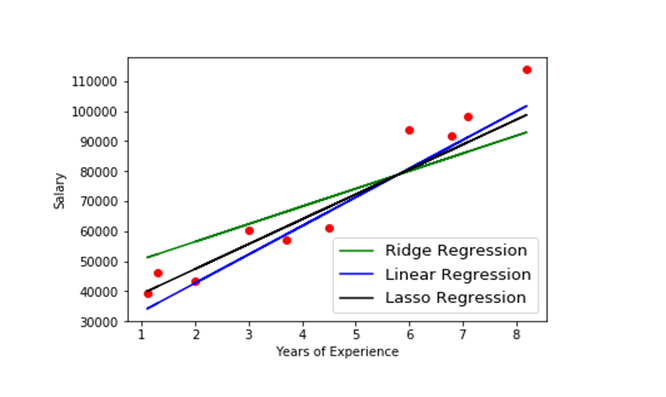

Ridge Regression, also known as L2 regularization, adds the squared magnitude of the coefficients as a penalty. Lasso Regression, or L1 regularization, instead adds a penalty based on the absolute values of the coefficients. In this article, we discuss both techniques in detail, including the differences between them.

Ridge Regression Definition & Examples

Ridge Regression is a linear modeling technique that shrinks the coefficients to prevent overfitting by adding a penalty on their squared magnitude. Ridge regression is similar to lasso in that it shrinks the coefficient estimates and reduces the impact of multicollinearity (correlation among the predictor variables). Unlike lasso, however, it does not set coefficients exactly to zero, so it keeps every predictor in the model rather than producing a sparser, more parsimonious one. In ridge regression analysis, estimating the ridge parameter k is an important problem [42].
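To make the shrinkage concrete, here is a minimal NumPy sketch (not taken from any of the sources above) of the standard closed-form ridge estimate (XᵀX + λI)⁻¹Xᵀy, where λ plays the role of the ridge parameter k. It assumes centered data so that no intercept is fitted; the helper name `ridge_closed_form` and the synthetic, nearly collinear data are illustrative choices.

```python
import numpy as np


def ridge_closed_form(X, y, lam):
    """Return (X^T X + lam * I)^{-1} X^T y.

    Assumes X and y are already centered, so there is no intercept to fit
    (and therefore none is penalized). lam = 0 gives ordinary least squares.
    """
    n_features = X.shape[1]
    A = X.T @ X + lam * np.eye(n_features)
    # Solving the linear system is more stable than forming the inverse explicitly.
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(0)
n = 50
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)  # almost an exact copy of x1
y = 3.0 * x1 + rng.normal(scale=0.5, size=n)

X = np.column_stack([x1, x2])
X = X - X.mean(axis=0)  # center the predictors
y = y - y.mean()        # center the response

beta_ols = ridge_closed_form(X, y, lam=0.0)
beta_ridge = ridge_closed_form(X, y, lam=1.0)

print("OLS:  ", beta_ols)    # often large coefficients of opposite sign
print("ridge:", beta_ridge)  # smaller coefficients that share the effect
```

With λ = 0 the function reduces to OLS, whose coefficients for the two nearly identical columns tend to be large and offsetting; adding λI to XᵀX makes the system well conditioned and pulls the estimates toward values that split the effect between x1 and x2.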

The scikit-learn `Ridge` estimator solves a regression model where the loss function is the linear least squares function and regularization is given by the L2 norm; this is also known as ridge regression or Tikhonov regularization. The estimator has built-in support for multivariate regression (i.e., when y is a 2D array of shape (n_samples, n_targets)).

Published Sep 8, 2024

Definition of Ridge Regression: Ridge Regression is a type of linear regression that addresses multicollinearity among predictor variables. The technique deliberately adds a degree of bias to the regression estimates through a process called regularization. The main idea is to penalize the size of the coefficients.
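The multi-target support mentioned above can be seen in a short sketch with synthetic data (the "true" coefficient values are made up for illustration). With a 2D `Y`, scikit-learn's `Ridge` stores one row of coefficients per target in `coef_`.

```python
import numpy as np
from sklearn.linear_model import Ridge

rng = np.random.default_rng(42)
X = rng.normal(size=(100, 3))

# Hypothetical true coefficients: one row per target.
true_coef = np.array([[1.0, -2.0, 0.5],
                      [0.0, 3.0, -1.0]])
# Y has shape (n_samples, n_targets) = (100, 2).
Y = X @ true_coef.T + rng.normal(scale=0.1, size=(100, 2))

model = Ridge(alpha=1.0)  # alpha is scikit-learn's name for the penalty strength
model.fit(X, Y)

print(model.coef_.shape)       # (2, 3): (n_targets, n_features)
print(model.intercept_.shape)  # (2,): one intercept per target
```

Fitting each column of `Y` separately with the same `alpha` gives the same coefficients; the multi-target fit simply solves them together.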

People often ask why lasso regression can make parameter values equal to 0 but ridge regression cannot. This StatQuest shows you why.

3.2.1 Example – Regularisation Paths

We here illustrate the effect of varying the tuning parameter λ on the ridge regression coefficients. We consider the Credit dataset in R (package ISLR). We do not worry about the code here; relevant coding for ridge regression will be covered in the practical demonstration of Section 3.6. You may, however, want to explore the help file for this [...]

Ridge regression and lasso regression are both modified linear regressions that apply regularization to prevent overfitting. They help improve model performance when dealing with highly correlated or numerous features. The loss function of linear regression is the residual sum of squares (RSS), which measures the total squared prediction error.
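The sketch below is a Python stand-in for a regularisation-path plot. It uses synthetic data rather than the ISLR Credit dataset, and scikit-learn's `Ridge` and `Lasso` rather than the R code of Section 3.6. Note that the two estimators scale `alpha` differently (`Lasso` divides the RSS by 2n, `Ridge` does not), so the same `alpha` value is not an equal penalty across the two.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

rng = np.random.default_rng(1)
n, p = 200, 5
X = rng.normal(size=(n, p))
true_coef = np.array([4.0, -3.0, 0.0, 0.0, 0.0])  # the last three predictors are useless
y = X @ true_coef + rng.normal(size=n)

alphas = [0.01, 0.1, 1.0, 10.0, 100.0, 1000.0]
# One row of fitted coefficients per alpha value.
ridge_path = np.array([Ridge(alpha=a).fit(X, y).coef_ for a in alphas])
lasso_path = np.array([Lasso(alpha=a).fit(X, y).coef_ for a in alphas])

for a, r, l in zip(alphas, ridge_path, lasso_path):
    print(f"alpha={a:>7}: ridge={np.round(r, 3)}  lasso={np.round(l, 3)}")
```

As `alpha` grows, every ridge coefficient shrinks but stays nonzero, while lasso sets the useless predictors, and eventually all predictors, exactly to zero.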

Amazing video. I have read many articles and watched many videos trying to understand the idea behind ridge and lasso regression, and you finally explained it in the simplest way. Many thanks for your effort.

Ridge Regression: A Robust Path to Reliable Predictions

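Ridge regression (L2 penalty): the ridge constraint can be written as the following penalized residual sum of squares (PRSS):

$$\mathrm{PRSS}(\beta) = \sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2$$

Lasso regression: the cost function for lasso (least absolute shrinkage and selection operator) regression can be written as

$$\sum_{i=1}^{n}\Big(y_i - \beta_0 - \sum_{j=1}^{p} x_{ij}\beta_j\Big)^2 + \lambda \sum_{j=1}^{p} |\beta_j|$$

(Figure: Cost function for lasso regression. Supplement 2: Lasso regression coefficients, subject to a constraint similar to the ridge constraint shown before.) Just like the ridge regression cost function, for λ = 0 the equation above reduces to equation 1.2. The only [...]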
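The two cost functions above translate directly into code. This is a minimal sketch with hypothetical helper names (`rss`, `ridge_prss`, `lasso_cost`) and a tiny example that can be checked by hand; following the usual convention, the intercept β₀ is not penalized. With λ = 0 both penalties vanish and each cost is just the RSS.

```python
import numpy as np


def rss(beta0, beta, X, y):
    """Residual sum of squares for intercept beta0 and slopes beta."""
    residuals = y - beta0 - X @ beta
    return float(np.sum(residuals ** 2))


def ridge_prss(beta0, beta, X, y, lam):
    """RSS plus lam times the squared L2 norm of the slopes (beta0 is not penalized)."""
    return rss(beta0, beta, X, y) + lam * float(np.sum(beta ** 2))


def lasso_cost(beta0, beta, X, y, lam):
    """RSS plus lam times the L1 norm of the slopes (beta0 is not penalized)."""
    return rss(beta0, beta, X, y) + lam * float(np.sum(np.abs(beta)))


X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([1.0, 2.0, 3.0])
beta0, beta = 0.5, np.array([0.1, -0.2])

print(rss(beta0, beta, X, y))                  # ≈ 14.88 (residuals 0.8, 2.0, 3.2)
print(ridge_prss(beta0, beta, X, y, lam=2.0))  # ≈ 14.88 + 2 * 0.05 = 14.98
print(lasso_cost(beta0, beta, X, y, lam=2.0))  # ≈ 14.88 + 2 * 0.3 = 15.48
```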

2 Ridge regression

Regularization. In regression, we can make this trade-off with regularization, which means placing constraints on the coefficients. Here is a picture from ESL for our first example. [...] the covariates. There is a one-to-one mapping between the radius s and the complexity parameter λ. Shrinking the parameters trades off an increase in bias for a decrease in variance.

1. Ridge Regression (L2 Regularization)

Ridge regression, or L2 regularization, penalizes the sum of the squared values of the coefficients. Imagine you're trying to reduce the height of the skyscrapers in a city skyline: ridge regularization brings them down proportionally, shrinking all the coefficients but never forcing them to exactly zero.
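The one-to-one mapping between the budget s and λ can be checked numerically: solve the penalized problem for a range of λ values and record s(λ) = ‖β̂(λ)‖², the budget under which the constrained problem (minimize RSS subject to Σβⱼ² ≤ s) has the same solution. A minimal NumPy sketch on synthetic, centered data; the helper `ridge_coef` is illustrative.

```python
import numpy as np

rng = np.random.default_rng(3)
X = rng.normal(size=(80, 4))
y = X @ np.array([2.0, -1.0, 0.5, 1.5]) + rng.normal(size=80)
X = X - X.mean(axis=0)  # centered, so no intercept is needed
y = y - y.mean()


def ridge_coef(lam):
    """Penalized (Lagrangian) ridge solution for a given lambda."""
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)


lams = np.logspace(-2, 3, 20)
# Budget s(lambda) = ||beta(lambda)||^2 matching each penalized solution.
budgets = np.array([np.sum(ridge_coef(lam) ** 2) for lam in lams])

for lam, s in zip(lams[::5], budgets[::5]):
    print(f"lambda={lam:9.3f}  s={s:.4f}")
```

Because s(λ) is strictly decreasing, each λ > 0 picks out exactly one budget s below the squared norm of the OLS solution, and vice versa.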

Ridge regression and its classifier variant, RidgeClassifier, are essential tools in data science for managing multicollinearity and controlling model complexity through regularization. In this [...]

Key Insights
- Both ridge and lasso regression are regularization techniques used to handle overfitting in regression models.
- Ridge regression minimizes the sum of squared residuals plus a penalty term proportional to the square of the slope.
- Lasso regression minimizes the sum of squared residuals plus a penalty term proportional to the absolute value of the slope.

This chapter presents regularization and selection methods for linear and nonlinear (parametric) models. These are important machine learning techniques because they serve three distinct objectives: (1) improving prediction; (2) model identification and causal inference in high-dimensional settings; and (3) detecting feature importance. The chapter starts by [...]
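For a single predictor, the ridge criterion in the key insights (sum of squared residuals plus λ times the squared slope) has a closed-form minimizer: slope = Σ(xᵢ − x̄)(yᵢ − ȳ) / (Σ(xᵢ − x̄)² + λ), with intercept ȳ − slope · x̄. The sketch below, on made-up toy data, compares it with OLS and with scikit-learn's `Ridge`, whose `alpha` corresponds to λ here.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Hypothetical toy data: one predictor x and a response y.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
lam = 2.0

x_c = x - x.mean()
y_c = y - y.mean()

# Minimizing sum((y - b0 - b*x)^2) + lam * b^2 gives these closed forms.
slope = np.sum(x_c * y_c) / (np.sum(x_c ** 2) + lam)
intercept = y.mean() - slope * x.mean()

ols_slope = np.sum(x_c * y_c) / np.sum(x_c ** 2)  # the lam = 0 case

# scikit-learn's Ridge minimizes the same objective, with alpha playing the role of lam.
model = Ridge(alpha=lam).fit(x.reshape(-1, 1), y)

print("OLS slope:  ", ols_slope)
print("ridge slope:", slope, "intercept:", intercept)
print("sklearn:    ", model.coef_[0], model.intercept_)
```

The extra λ in the denominator is exactly what pulls the slope toward zero relative to OLS.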

Day 27 of #66DaysOfData. Focus area: Machine Learning Fundamentals. What I learned today: 1. Regularization – Ridge Regression.

What is regularization? It is one of the most important concepts in machine learning. This technique prevents the model from overfitting by adding extra information to it. It is a form of regression that shrinks the coefficient estimates towards zero. In other words, this technique discourages learning an overly complex or flexible model, to avoid the problem of overfitting.

This lesson introduces Ridge Regression, a type of linear regression that includes a regularization term to prevent overfitting. By walking through the process of loading and splitting a real dataset, training a Ridge Regression model using Scikit-Learn, and interpreting the model's coefficients and intercept, students will understand how to apply regularization effectively in regression [...]

Before we see how to implement a neural network in Python without the aid of scikit-learn, we should first take a look at some regularization techniques. In this article, we will try to understand both L1 and L2 regularization.

What Is Regularization? One of the first things to ask ourselves is: what exactly is regularization?
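The workflow described in this lesson (load, split, fit, inspect) might look like the following sketch. It assumes scikit-learn's bundled diabetes dataset as the "real dataset" and an arbitrary `alpha=0.1`; neither choice comes from the lesson itself.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Ridge
from sklearn.model_selection import train_test_split

# The diabetes dataset ships with scikit-learn, so no download is needed.
data = load_diabetes()
X, y = data.data, data.target

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=0
)

# alpha=0.1 is an illustrative choice, not a tuned value.
model = Ridge(alpha=0.1)
model.fit(X_train, y_train)

# One intercept plus one coefficient per feature.
print(f"intercept: {model.intercept_:.2f}")
for name, coef in zip(data.feature_names, model.coef_):
    print(f"{name:>4}: {coef:9.2f}")
print(f"test R^2: {model.score(X_test, y_test):.3f}")
```

Each coefficient is the change in the predicted target per unit change in that (already scaled) feature, shrunk toward zero by the penalty.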

What is Ridge Regression? Ridge regression is a modification of linear regression that adds a regularization term to the loss function to avoid overfitting.

Elastic-Net Regression combines Lasso Regression with Ridge Regression to give you the best of both worlds. It works well when there are lots of useless variables.
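A hedged sketch of elastic net using scikit-learn's `ElasticNet` on synthetic data that contains a highly correlated pair of predictors and three useless ones. In scikit-learn, `alpha` sets the overall penalty strength and `l1_ratio` the lasso/ridge mix; the values here are illustrative, not tuned.

```python
import numpy as np
from sklearn.linear_model import ElasticNet

rng = np.random.default_rng(7)
n = 200
signal = rng.normal(size=n)
X = np.column_stack([
    signal,
    signal + rng.normal(scale=0.05, size=n),  # highly correlated with column 0
    rng.normal(size=(n, 3)),                  # three useless predictors
])
y = 2.0 * signal + rng.normal(size=n)

# l1_ratio mixes the penalties: 1.0 is pure lasso, 0.0 is pure ridge.
enet = ElasticNet(alpha=1.0, l1_ratio=0.5).fit(X, y)
print(np.round(enet.coef_, 3))
```

The L1 part zeroes out the useless predictors, while the L2 part lets the correlated pair share the weight instead of keeping only one of them.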

This tutorial provides a quick introduction to ridge regression, including an explanation and examples. Today we are going to learn about one of the most frequently asked interview questions: what is ridge regression? People in analytics or data science often limit their understanding of regression algorithms to basic linear regression; very few are aware of regularization techniques such as ridge regression and lasso regression. In this article, we will explore the key differences between ridge and lasso regression and give insights into when to choose one over the other, so that data analysts can improve model accuracy and make more reliable predictions.
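One practical difference that often guides the choice is how each method treats correlated predictors. The extreme case of two identical columns, sketched with scikit-learn on synthetic data, shows it: the ridge penalty favors an even split of the weight, while the lasso penalty is indifferent to how a same-sign weight is split between the copies.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

rng = np.random.default_rng(11)
x = rng.normal(size=100)
X = np.column_stack([x, x])  # two identical copies of the same predictor
y = 3.0 * x + rng.normal(scale=0.5, size=100)

ridge = Ridge(alpha=1.0).fit(X, y)
lasso = Lasso(alpha=0.1).fit(X, y)

# The ridge penalty is smallest when the weight is split evenly between the copies.
print("ridge:", ridge.coef_)
# The L1 penalty is the same for any same-sign split, so the split is up to the solver.
print("lasso:", lasso.coef_)
```

In practice the coordinate-descent solver behind `Lasso` tends to put most or all of the weight on one copy, which is why lasso is often described as picking one variable from a correlated group.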

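Before learning ridge regression, first review the following topics: bias and variance, linear models, and cross-validation. Main content of this article (images are from StatQuest; video link below): Regularization Part 1: Ridge (L2) Regression. Let's start [...]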
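Since cross-validation is listed as a prerequisite, here is a minimal sketch of using it to pick the ridge penalty with scikit-learn's `RidgeCV` on synthetic data; the grid of candidate `alphas` is an arbitrary illustrative choice.

```python
import numpy as np
from sklearn.linear_model import RidgeCV

rng = np.random.default_rng(5)
X = rng.normal(size=(150, 8))
y = X @ rng.normal(size=8) + rng.normal(size=150)

# Candidate penalty strengths on a log scale.
alphas = np.logspace(-3, 3, 13)

# cv=5 requests 5-fold cross-validation; leaving cv unset would use
# an efficient leave-one-out scheme instead.
model = RidgeCV(alphas=alphas, cv=5).fit(X, y)
print("chosen alpha:", model.alpha_)
print("coefficients:", np.round(model.coef_, 3))
```

The chosen `alpha_` is always one of the supplied candidates, so a coarse grid followed by a finer one around the winner is a common refinement.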
