Radar-Camera-Fusion/Awesome-Radar-Camera-Fusion

Di: Ava

In autonomous driving systems, cameras and light detection and ranging (LiDAR) are two common sensors for object detection. However, both sensors can be severely affected by adverse weather. With the development of radar technology, the emergence of 4D radar offers a more robust basis for sensor fusion strategies in 3D object detection tasks. This study proposes a

Radar Camera Fusion in Autonomous Driving.

In this paper, we introduce CRT-Fusion, a novel framework that integrates temporal information into radar-camera fusion to address this challenge. Our approach comprises three key modules: Multi-View Fusion (MVF), Motion Feature Estimator

Paper title: Radar-Camera Fusion for Object Detection and Semantic Segmentation in Autonomous Driving: A Comprehensive Review. Published: 2023. Authors: Shanliang Yao, Runwei Guan, Xiaoyu Huang, Zhuoxiao Li

In this work, we present SpaRC, a novel Sparse fusion transformer for 3D perception that integrates multi-view image semantics with Radar and Camera point features. The fusion of radar and camera modalities has emerged as an efficient perception paradigm for autonomous driving systems. While conventional approaches utilize dense Bird’s Eye View

Radar-Camera Fusion has 3 repositories available.

Sensor fusion of camera, LiDAR, and 4-dimensional (4D) radar has brought a significant performance improvement in autonomous driving (AD). However, fundamental challenges remain: deeply coupled fusion methods assume continuous sensor availability, making them vulnerable to sensor degradation and failure, whereas sensor-wise cross

Recent camera-RADAR fusion architectures have demonstrated the potential of sensor fusion within the 3D BEV space. SimpleBEV [17] represents an impactful work in 3D object segmentation and BEV-based sensor fusion, where the RADAR point clouds are rasterized and then concatenated with the BEV image-view features obtained from a
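The rasterize-then-concatenate step described for BEV-based fusion can be sketched as below. This is a minimal illustration under stated assumptions, not the SimpleBEV implementation: the function names `rasterize_radar_to_bev` and `fuse_bev`, the grid extents, the cell size, and the choice of two radar channels (occupancy and mean of one per-point feature such as radial velocity) are all hypothetical.

```python
import numpy as np


def rasterize_radar_to_bev(points, x_range, y_range, cell):
    """Rasterize radar points into a 2-channel BEV grid.

    points: rows of (x, y, feature); the feature could be radial velocity
            (an assumption made for illustration).
    Returns an array of shape (2, H, W): occupancy and per-cell mean feature.
    """
    points = np.asarray(points, dtype=np.float32).reshape(-1, 3)
    width = int(round((x_range[1] - x_range[0]) / cell))
    height = int(round((y_range[1] - y_range[0]) / cell))

    # Map metric coordinates to integer cell indices.
    cols = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    rows = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)

    # Discard points that fall outside the grid.
    inside = (cols >= 0) & (cols < width) & (rows >= 0) & (rows < height)
    rows, cols, feats = rows[inside], cols[inside], points[inside, 2]

    # Accumulate counts and feature sums; np.add.at handles repeated cells.
    count = np.zeros((height, width), dtype=np.float32)
    feat_sum = np.zeros((height, width), dtype=np.float32)
    np.add.at(count, (rows, cols), 1.0)
    np.add.at(feat_sum, (rows, cols), feats)

    occupancy = (count > 0).astype(np.float32)
    mean_feat = np.divide(
        feat_sum, count, out=np.zeros_like(feat_sum), where=count > 0
    )
    return np.stack([occupancy, mean_feat])


def fuse_bev(camera_bev, radar_bev):
    """Concatenate camera and radar BEV maps along the channel axis."""
    return np.concatenate([camera_bev, radar_bev], axis=0)


# Usage: an 8-channel camera BEV map (zeros as a stand-in) plus radar channels.
radar_points = [[0.5, 0.5, 2.0], [0.7, 0.2, 4.0], [3.5, 2.5, -1.0], [10.0, 10.0, 5.0]]
radar_bev = rasterize_radar_to_bev(radar_points, (0.0, 4.0), (0.0, 4.0), 1.0)
fused = fuse_bev(np.zeros((8, 4, 4), dtype=np.float32), radar_bev)
```

Concatenation keeps the two modalities in separate channels and leaves it to the downstream network to learn how to combine them; both maps must share the same grid extents and resolution for this to be meaningful.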

Radar-Camera-Fusion repositories · GitHub

Highway Vehicle Tracking Using Multi-Sensor Data Fusion

Track vehicles on a highway with commonly used sensors such as radar, camera, and lidar. In this example, you configure and run a Joint Integrated Probabilistic Data Association (JIPDA) tracker to track vehicles using recorded data from a suburban highway driving scenario.

Furthermore, to enhance feature-level fusion, we propose a multi-scale radar-camera fusion strategy that integrates radar information across multiple stages of the camera subnet’s backbone, allowing for improved object detection across various scales.

ZHOUYI1023/awesome-radar-perception, comments: nuScenes, DENSE and Pixset are for sensor fusion, but do not particularly address the role of radar. RadarScenes provides point-wise annotations for radar point clouds, but has no other modalities. Pointillism uses 2 radars with overlapping views. Zendar seems to be no longer available for download. AiMotive focuses on long-range 360 degree multi-sensor fusion.

Abstract: Radars and cameras are mature, cost-effective, and robust sensors and have been widely used in the perception stack of mass-produced autonomous driving systems. Due to their complementary properties, outputs from radar detection (radar pins) and camera perception (2D bounding boxes) are usually fused to generate the best perception results.
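The pairing of radar pins with camera 2D bounding boxes mentioned above can be illustrated with a deliberately naive sketch. It assumes radar points are already expressed in the camera frame (x right, y down, z forward), a pinhole camera with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, and a simple rule that assigns each box the depth of the closest radar point projecting inside it. The helper names are made up for illustration, and production systems use more robust association than this.

```python
def project_to_image(point, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates.

    Returns None for points at or behind the image plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)


def associate_radar_with_boxes(boxes, radar_points, fx, fy, cx, cy):
    """For each 2D box (x1, y1, x2, y2), return the depth of the nearest
    radar point whose projection lies inside the box, or None if no
    radar point matches."""
    depths = []
    for x1, y1, x2, y2 in boxes:
        best = None
        for point in radar_points:
            pixel = project_to_image(point, fx, fy, cx, cy)
            if pixel is None:
                continue
            u, v = pixel
            inside = x1 <= u <= x2 and y1 <= v <= y2
            if inside and (best is None or point[2] < best):
                best = point[2]
        depths.append(best)
    return depths


# Usage with made-up intrinsics and detections.
boxes = [(40, 40, 60, 60), (90, 40, 110, 60), (0, 0, 10, 10)]
radar_points = [(0.0, 0.0, 10.0), (1.0, 0.0, 20.0), (2.5, 0.0, 5.0), (0.0, 0.0, -5.0)]
depths = associate_radar_with_boxes(boxes, radar_points, 100.0, 100.0, 50.0, 50.0)
```

Taking the nearest point favors the foreground object when background clutter also projects into the box. Because conventional automotive radar has large uncertainty in elevation, the vertical pixel coordinate of a projected radar point is often unreliable, which is one reason real pipelines relax or learn the association instead of using a hard inside-the-box test.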

4D radar has received significant attention in autonomous driving thanks to its robustness under adverse weather. Because 4D radar points are sparse and its measurements noisy, most research completes the 3D object detection task by integrating camera images and performing modality fusion in BEV space.

HGSFusion: Radar-Camera Fusion with Hybrid Generation and Synchronization for 3D Object

The perception system in autonomous vehicles is responsible for detecting and tracking the surrounding objects. This is usually done by taking advantage of several sensing modalities to increase robustness and accuracy, which makes sensor fusion a crucial part of the perception system. In this paper, we focus on the problem of radar and camera sensor fusion

Augmenting Visual AI Through Radar and Camera Fusion. Sébastien Taylor, V.P. of Research & Development, Au-Zone Technologies

- SpaRC: Sparse Radar-Camera Fusion for 3D Object Detection

- Radar-vision fusion 3D detection (radar-vision fusion BEV), CSDN blog

- 宋运奇/awesome-radar-perception

- Paper reading: Radar-Camera Fusion

Overview · Datasets · SOTA Papers · From 4D Radar (Point Cloud & Radar Tensor) · Fusion of 4D Radar & LiDAR · Fusion of 4D Radar & RGB Camera · Others

A curated list of radar datasets, detection, tracking and fusion: ZHOUYI1023/awesome-radar-perception

Title: Radar-Camera Sensor Fusion for Joint Object Detection and Distance Estimation in Autonomous Vehicles

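arXiv paper "RadSegNet: A Reliable Approach to Radar Camera Fusion", 8 August 2022, from UCSD. Perception systems for autonomous driving struggle to remain robust in extreme weather, because the performance of the primary sensors, such as LiDAR and cameras, degrades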

- awesome-radar-perception/useful_codes.md at main

- Radar and Camera Tracking

- Temporal-Enhanced Radar and Camera Fusion for Object Detection

- HGSFusion: Radar-Camera Fusion with Hybrid Generation and

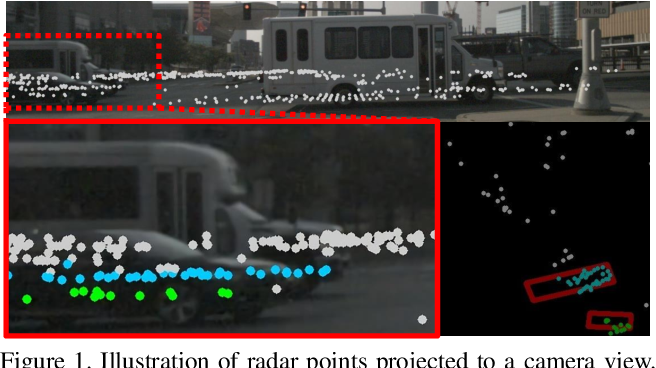

Although radar sensors are widely used in vehicles, few studies focus on data fusion from radars and cameras. One reason for this is the limitations of radar output data, such as low resolution, sparse point clouds, uncertainty in elevation and clutter effects. Another reason is that up to now, the datasets containing both radar and camera data for autonomous driving

Abstract: While LiDAR sensors have been successfully applied to 3D object detection, the affordability of radar and camera sensors has led to a growing interest in fusing radars and cameras for 3D object detection. However, previous radar-camera fusion models could not fully utilize the potential of radar information.

Sensor fusion is an important method for achieving robust perception systems in autonomous driving, Internet of things, and robotics. Most multi-modal 3D detection models assume the data is synchronized between the sensors and do not necessarily have real-time capabilities. We propose RCF-TP, an asynchronous, modular, real-time multi-modal

This repository provides a neural network for object detection based on camera and radar data. It builds on the work of Keras RetinaNet. The network performs a multi-level fusion of the radar and camera data within the neural network. The network can be tested on the nuScenes dataset, which provides camera and radar data along with 3D ground truth information.

In real-world object detection applications, the camera can be affected by poor lighting conditions, resulting in degraded performance. Millimeter-wave radar and camera have complementary advantages: the radar point cloud can help detect small objects under low light. In this study, we focus on feature-level fusion and propose a novel end-to-end detection network

- 2021-Full-Velocity Radar Returns by Radar-Camera Fusion. ICCV; nuScenes; OpticalFlow; Paper

- 2021-3D Radar Velocity Maps for Uncertain Dynamic Environments. IROS; Bayesian; Paper; Code

To address this, we propose a temporal-enhanced radar and camera fusion network to explore the correlation between these two modalities and learn a comprehensive representation for object detection.

The integration of cameras and millimeter-wave radar into sensor fusion algorithms is essential to ensure robustness and cost effectiveness for vehicle pose estimation.

We present a novel approach for metric dense depth estimation based on the fusion of a single-view image and a sparse, noisy Radar point cloud. The direct fusion of heterogeneous Radar and image data, or their encodings, tends to yield dense depth maps with significant artifacts, blurred boundaries, and suboptimal accuracy. To circumvent this issue, we

Therefore, multi-sensor fusion technology based on millimeter wave radar and camera has become a key approach for object detection in autonomous driving.
