Problems And Solutions In Optimization

By: Ava

The beauty of exact optimization methods is that they are guaranteed to identify the best solution (technically, an optimal solution). But depending on the problem, finding the optimal solution can be costly, time-consuming, or impractical. If the space of decision variables is too large, exact optimization methods may be intractable.

Convex Optimization Solutions Manual

Graphical Method

The graphical method is used to solve linear programming problems with two decision variables. For such problems it is a convenient way to find the optimal solution. In this method, the constraints are written as a set of inequalities, and these inequalities are plotted in the XY plane. Once all the inequalities are plotted, the region where they all hold defines the feasible region, and an optimal solution, if one exists, occurs at one of its corner points.
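The corner-point idea behind the graphical method can be checked numerically. The sketch below uses a hypothetical two-variable problem (maximize 3x + 5y subject to x ≤ 4, 2y ≤ 12, 3x + 2y ≤ 18, x, y ≥ 0), intersects every pair of constraint boundary lines, keeps the feasible intersections as corner points, and picks the best one. It assumes the feasible region is nonempty and bounded; the names `CONSTRAINTS`, `OBJECTIVE`, and `solve_graphically` are illustrative only.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical LP: maximize 3x + 5y subject to the constraints below.
# Each constraint (a, b, c) means a*x + b*y <= c.
CONSTRAINTS = [
    (1, 0, 4),    # x <= 4
    (0, 2, 12),   # 2y <= 12
    (3, 2, 18),   # 3x + 2y <= 18
    (-1, 0, 0),   # x >= 0, written as -x <= 0
    (0, -1, 0),   # y >= 0, written as -y <= 0
]
OBJECTIVE = (3, 5)


def intersect(c1, c2):
    """Intersection of the boundary lines a*x + b*y = c (Cramer's rule), or None if parallel."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    return Fraction(r1 * b2 - r2 * b1, det), Fraction(a1 * r2 - a2 * r1, det)


def feasible(point):
    x, y = point
    return all(a * x + b * y <= c for a, b, c in CONSTRAINTS)


def objective(point):
    return OBJECTIVE[0] * point[0] + OBJECTIVE[1] * point[1]


def solve_graphically():
    # Corner points of the feasible region are feasible pairwise intersections.
    corners = set()
    for c1, c2 in combinations(CONSTRAINTS, 2):
        p = intersect(c1, c2)
        if p is not None and feasible(p):
            corners.add(p)
    best = max(corners, key=objective)
    return best, objective(best), corners


best, value, corners = solve_graphically()
print(best, value, sorted(corners))
```

Exact `Fraction` arithmetic is used so that points lying exactly on a boundary are not misclassified by floating-point rounding.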

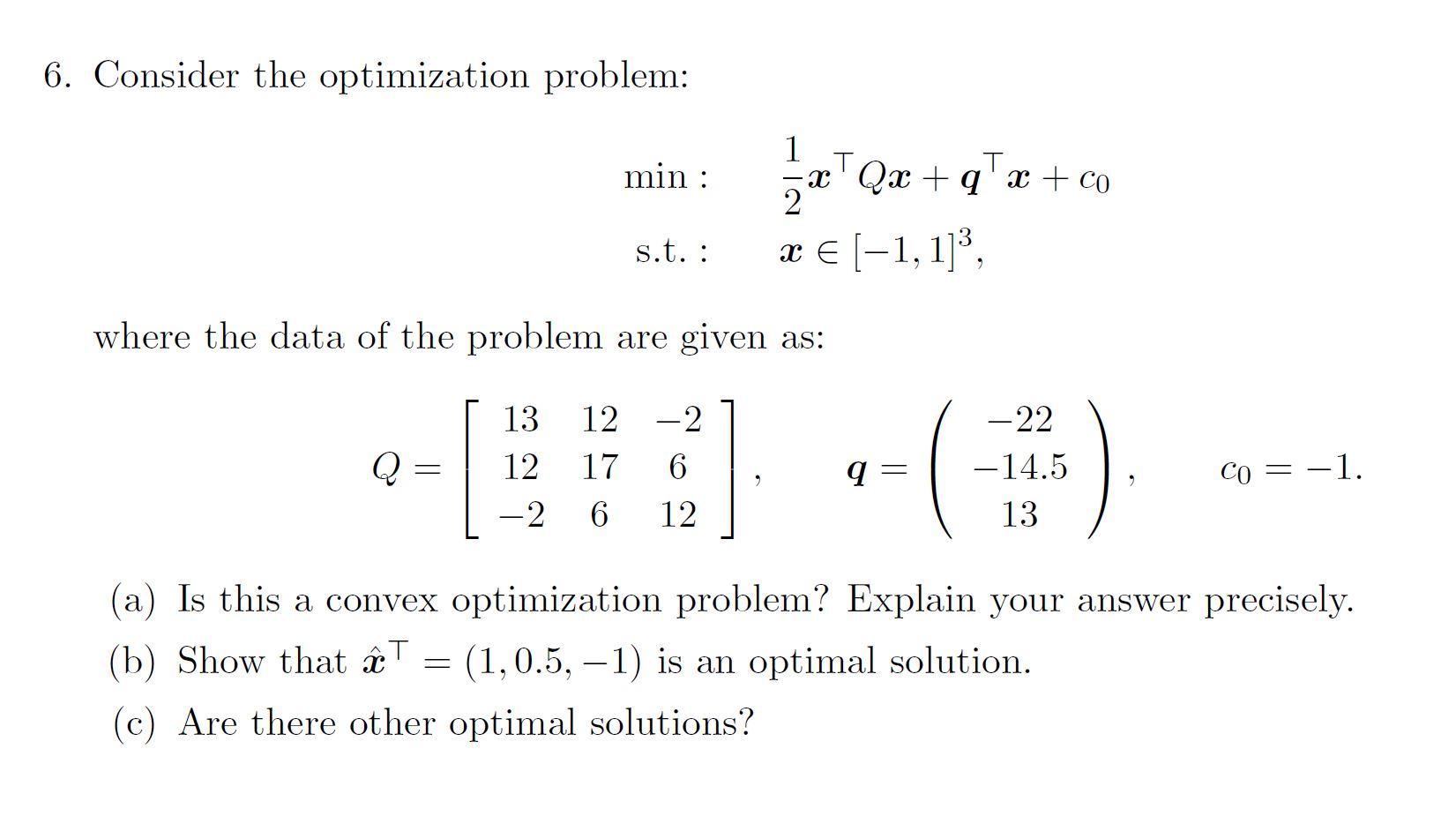

What is an optimization problem? Optimization problems are often subdivided into classes:

- Linear vs. nonlinear

- Convex vs. nonconvex

- Unconstrained vs. constrained

- Smooth vs. nonsmooth

- With derivatives vs. derivative-free

- Continuous vs. discrete

- Algebraic vs. ODE/PDE

Often a “good enough” solution will suffice. For some problems it is possible to devise efficient heuristic algorithms that come close to the optimum with acceptable consistency. In the case of the traveling salesman problem, for example, one obvious heuristic is to first pick a starting city and then keep traveling to the closest unvisited city; when all cities have been visited, return to the starting city.
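The nearest-neighbor heuristic for the traveling salesman problem takes only a few lines. This is a minimal sketch assuming cities are points in the plane with Euclidean distances; the coordinates and the function names `nearest_neighbor_tour` and `tour_length` are hypothetical. It produces a valid tour quickly but with no guarantee of optimality.

```python
import math


def nearest_neighbor_tour(cities, start=0):
    """Greedy TSP heuristic: from the current city, always move to the closest unvisited one."""
    unvisited = [i for i in range(len(cities)) if i != start]
    tour = [start]
    while unvisited:
        here = cities[tour[-1]]
        # min() returns the first closest city, so ties go to the lower index.
        nxt = min(unvisited, key=lambda j: math.dist(here, cities[j]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


def tour_length(cities, tour):
    """Length of the closed tour, including the leg back to the starting city."""
    return sum(
        math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


CITIES = [(0, 0), (1, 0), (1, 1), (0, 1), (5, 5)]  # hypothetical coordinates
tour = nearest_neighbor_tour(CITIES)
print(tour, round(tour_length(CITIES, tour), 3))
```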

This work presents a study of a special class of infeasible solutions, called here pseudo-feasible solutions, in bilevel optimization. It focuses on determining how such solutions can affect the performance of an evolutionary algorithm.

Most business decisions are optimization problems: they vary some decision parameters to maximize profit (e.g., investment portfolios, supply chains).

Exercise: show that a set is convex if and only if its intersection with any line is convex.

Solution. We prove the first part. The intersection of two convex sets is convex. Therefore, if S is a convex set, the intersection of S with a line is convex. Conversely, suppose the intersection of S with any line is convex. Take any two distinct points x1, x2 ∈ S. The intersection of S with the line through x1 and x2 is convex. Therefore, convex combinations of x1 and x2 belong to the intersection, and hence also to S.
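The converse direction rests on one identity: every convex combination of x1 and x2 is a point on the line through them. Written out with the standard definition of convexity (θ is the combination weight and L the line; both symbols are introduced here for illustration):

```latex
\begin{aligned}
&S \text{ convex} \iff \theta x_1 + (1-\theta)\,x_2 \in S
  \quad \forall\, x_1, x_2 \in S,\ \theta \in [0,1],\\
&L = \{\, x_1 + t\,(x_2 - x_1) : t \in \mathbb{R} \,\},\\
&\theta x_1 + (1-\theta)\,x_2 = x_1 + (1-\theta)(x_2 - x_1) \in L
  \quad (t = 1-\theta),\\
&x_1, x_2 \in S \cap L,\ S \cap L \text{ convex}
  \;\Rightarrow\; \theta x_1 + (1-\theta)\,x_2 \in S \cap L \subseteq S.
\end{aligned}
```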

Optimization problems can be divided into two categories, depending on whether the variables are continuous or discrete. An optimization problem with discrete variables is known as a discrete optimization problem, in which an object such as an integer, permutation, or graph must be found from a countable set. A problem with continuous variables is known as a continuous optimization problem, in which optimal arguments must be found from a continuous set.
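The difference between the two categories shows up even for a single variable. The sketch below minimizes a hypothetical objective f(x) = (x − 2.4)² once over a countable (here finite) set of integers by enumeration, and once over a real interval by ternary search, which is valid only because this f is unimodal on the interval. The function names are illustrative.

```python
def f(x: float) -> float:
    # Hypothetical objective; its continuous minimizer is x = 2.4.
    return (x - 2.4) ** 2


# Discrete optimization: pick the best element of a countable candidate set.
best_int = min(range(-10, 11), key=f)


def ternary_search_min(g, lo, hi, iters=200):
    """Shrink [lo, hi] around the minimizer of a unimodal function g."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if g(m1) < g(m2):
            hi = m2  # the minimizer cannot lie in (m2, hi]
        else:
            lo = m1  # the minimizer cannot lie in [lo, m1)
    return (lo + hi) / 2


# Continuous optimization: search the whole interval of real numbers.
best_real = ternary_search_min(f, -10.0, 10.0)
print(best_int, round(best_real, 6))  # 2 2.4
```

The discrete optimum (2) is not simply the continuous optimum (2.4) rounded in general; here it happens to coincide, but for constrained or multivariable problems rounding can give poor or infeasible points.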

IV. Solving Network Problems

DIFFERENTIATION OPTIMIZATION PROBLEMS

An open box is to be made out of a rectangular piece of card measuring 64 cm by 24 cm. Figure 1 shows how a square of side length x cm is to be cut out of each corner so that the box can be made by folding, as shown in figure 2. Show that the volume of the box, V cm³, is given by V = 4x³ − 176x² + 1536x.

This book offers practical guidance on solving real-world optimization problems and covers all the main aspects of the optimization process.
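The volume formula follows from V = x(64 − 2x)(24 − 2x): the base is (64 − 2x) by (24 − 2x) and the height is x. A natural follow-up, not stated in the excerpt, is to find the cut that maximizes V. The sketch below checks the expansion and solves V′(x) = 12x² − 352x + 1536 = 0 exactly; since V = 0 at both ends of 0 ≤ x ≤ 12 and V > 0 in between, the single interior critical point is the maximum. Function names are illustrative.

```python
from fractions import Fraction
import math


def volume(x):
    """V(x) = x(64 - 2x)(24 - 2x) in cm^3 for a corner cut of x cm."""
    return x * (64 - 2 * x) * (24 - 2 * x)


def expanded(x):
    """The expanded form the exercise asks to show."""
    return 4 * x**3 - 176 * x**2 + 1536 * x


def best_cut():
    # V'(x) = 12x^2 - 352x + 1536 = 4(3x^2 - 88x + 384)
    a, b, c = 3, -88, 384
    disc = b * b - 4 * a * c  # 7744 - 4608 = 3136 = 56^2, a perfect square
    root = math.isqrt(disc)
    candidates = [Fraction(-b - root, 2 * a), Fraction(-b + root, 2 * a)]
    # Only 0 < x < 12 leaves a box, because the card is 24 cm wide.
    feasible = [x for x in candidates if 0 < x < 12]
    return max(feasible, key=volume)


x_star = best_cut()
print(x_star, volume(x_star))  # 16/3 102400/27
```

So the largest box uses x = 16/3 cm and has volume 102400/27 ≈ 3792.6 cm³.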

- Navigating Challenges in Nonlinear Optimization

- Convex Optimization Solutions Manual

- Introduction to Numerical Optimization Problem Sets with Solutions

- Section 4.6/4.7: Optimization Problems

Calculus Optimization Problems/Related Rates Problems Solutions

1) A farmer has 400 yards of fencing and wishes to fence three sides of a rectangular field (the fourth side is along an existing stone wall and needs no additional fencing). Find the dimensions of the rectangular field of largest area that can be fenced.

A Brief Overview of Optimization Problems, Steven G. Johnson, MIT course 18.335, Spring 2019
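A worked solution to problem 1, as a sketch: let x be the length of each of the two sides perpendicular to the wall and y the side parallel to it (both symbols are introduced here). The fencing constraint turns the area into a one-variable function, and the first-derivative test gives the maximum:

```latex
\begin{aligned}
2x + y &= 400 \;\Rightarrow\; y = 400 - 2x, \qquad 0 < x < 200\\
A(x) &= x\,(400 - 2x) = 400x - 2x^2\\
A'(x) &= 400 - 4x = 0 \;\Rightarrow\; x = 100\\
A''(x) &= -4 < 0 \;\Rightarrow\; \text{maximum}\\
y &= 400 - 2(100) = 200, \qquad A = 100 \cdot 200 = 20\,000\ \text{yd}^2
\end{aligned}
```

The largest field is 100 yards by 200 yards, with the 200-yard side parallel to the wall.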

Excel Solver's Evolutionary method is designed for complex optimization problems, such as non-smooth models, that the Simplex LP and GRG Nonlinear methods are not suited to. By selecting the solving method that matches the nature of your problem, you can use Excel Solver to find good, and often optimal, solutions efficiently.

Successful solution of problems in this vein usually depends on a marriage between techniques in ordinary “continuous” optimization and special ways of handling certain kinds of combinatorial structure.

The following problems are maximum/minimum optimization problems. They illustrate one of the most important applications of the first derivative. Many students find these problems intimidating because they are “word” problems and because there does not appear to be a pattern to them. However, if you are patient, you can minimize your anxiety and maximize your success with these problems.

In summary, the benefits of using genetic algorithms for optimization and problem solving are their ability to search large solution spaces efficiently, their adaptability to various problems, their capability to handle complex and nonlinear problems, and their computational efficiency.

Example 1: Optimization: perimeter and area. A man has 100 feet of fencing, a large yard, and a small dog. He wants to create a rectangular enclosure for his dog with the fencing that provides maximal area. What dimensions provide maximal area?
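Calculus answers the fencing example directly: with sides x and 50 − x, the area A(x) = x(50 − x) has A′(x) = 50 − 2x = 0 at x = 25, a 25 ft by 25 ft square. To show how a genetic algorithm approaches the same problem without derivatives, here is a minimal real-coded GA sketch. The operators (binary tournament selection, arithmetic crossover, Gaussian mutation, elitism) and all parameter values are illustrative choices, not a prescribed method.

```python
import random

PERIMETER = 100.0  # feet of fencing, from the example


def area(x: float) -> float:
    """Area of a rectangle with sides x and PERIMETER/2 - x."""
    return x * (PERIMETER / 2 - x)


def genetic_maximize(fitness, lo, hi, pop_size=40, generations=100, sigma=1.0, seed=0):
    rng = random.Random(seed)  # seeded so runs are reproducible
    population = [rng.uniform(lo, hi) for _ in range(pop_size)]

    def tournament(pop):
        # Binary tournament selection: the fitter of two random individuals wins.
        a, b = rng.sample(pop, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        # Elitism: carry the best individual over unchanged.
        next_gen = [max(population, key=fitness)]
        while len(next_gen) < pop_size:
            p1, p2 = tournament(population), tournament(population)
            w = rng.random()
            child = w * p1 + (1 - w) * p2          # arithmetic crossover
            child += rng.gauss(0.0, sigma)         # Gaussian mutation
            next_gen.append(min(hi, max(lo, child)))  # clip to [lo, hi]
        population = next_gen
    return max(population, key=fitness)


best_x = genetic_maximize(area, 0.0, PERIMETER / 2)
print(f"side ~ {best_x:.2f} ft, area ~ {area(best_x):.2f} sq ft")
```

The GA only approximates the exact answer; its appeal lies in problems where no derivative or closed form is available.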

Optimization Algorithms in Machine Learning

An optimization problem is the task of finding the best solution from the set of feasible solutions, that is, those that satisfy all the constraints defined by the problem. It involves identifying the values of the decision variables that minimize or maximize an objective (fitness) function while satisfying any specified constraints.

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets).

Optimization problem: a problem that seeks to maximize or minimize a function of the variables subject to linear inequality constraints is called an optimization problem.

Describe this problem as a linear optimization problem, and set up the initial tableau for applying the simplex method. (But do not solve it, unless you really want to, in which case it's OK to have partial (fractional) servings.)

Optimization problems involve using calculus techniques to find the absolute maximum and absolute minimum values (steps on p. 306).
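The exercise's own data is not included in this excerpt, so the sketch below sets up an initial simplex tableau for a hypothetical problem in standard form: maximize c·x subject to Ax ≤ b, x ≥ 0, with b ≥ 0. Each constraint row gets a slack column, and the last row holds the negated objective coefficients. A minimization with ≥ constraints, as diet problems usually are, would instead need surplus and artificial variables (or the dual problem). The function name `initial_tableau` is illustrative.

```python
from fractions import Fraction


def initial_tableau(A, b, c):
    """Initial simplex tableau for: maximize c.x subject to A x <= b, x >= 0, with b >= 0.

    Each constraint row is [A_i | slack columns | b_i]; the last row is the
    objective row [-c | 0 ... 0 | 0].
    """
    m = len(A)
    tableau = []
    for i, (a_row, b_i) in enumerate(zip(A, b)):
        slack = [Fraction(1 if j == i else 0) for j in range(m)]
        tableau.append([Fraction(v) for v in a_row] + slack + [Fraction(b_i)])
    tableau.append([Fraction(-v) for v in c] + [Fraction(0)] * m + [Fraction(0)])
    return tableau


# Hypothetical data: maximize 3x1 + 5x2 s.t. x1 <= 4, 2x2 <= 12, 3x1 + 2x2 <= 18
for row in initial_tableau([[1, 0], [0, 2], [3, 2]], [4, 12, 18], [3, 5]):
    print([str(v) for v in row])
```

The slack variables form an identity block, so the starting basic feasible solution is x = 0 with every slack equal to its right-hand side.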

Optimization algorithms in machine learning are mathematical techniques used to adjust a model's parameters to minimize a loss (error) function and improve accuracy. These algorithms help models learn from data by searching for the best parameters through iterative updates. In this article, we'll explore the most common optimization algorithms and how they work.
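Plain gradient descent is the simplest of these iterative updates. As a minimal sketch, the code below fits a line y ≈ w·x + b to a tiny hypothetical dataset by repeatedly stepping against the gradient of the mean squared error. The learning rate and step count are illustrative; a learning rate that is too large makes the iteration diverge.

```python
def gradient_descent_linear_regression(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by minimizing mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = mean((w*x + b - y)^2) with respect to w and b
        grad_w = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Move each parameter a small step against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical data generated by y = 2x + 1
w, b = gradient_descent_linear_regression([0, 1, 2, 3], [1, 3, 5, 7])
print(round(w, 4), round(b, 4))  # 2.0 1.0
```

Variants such as stochastic or mini-batch gradient descent follow the same update rule but estimate the gradient from a subset of the data at each step.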


Abstract: Multiobjective combinatorial optimization problems appear in a wide range of applications, including operations research and management, engineering, biological sciences, and computer science. This work presents a brief analysis of the main concepts of these problems and of the solution approaches applied to them.

Using Genetic Algorithms for Optimization and Problem Solving

Mathematical Optimization

Let us start by describing what mathematical optimization is: it is the science of finding the “best” solution based on a given objective.

Guideline for Solving Optimization Problems

- Identify what is to be maximized or minimized and what the constraints are.

- Draw a diagram (if appropriate) and label the relevant quantities.

In this paper, we establish the existence of efficient solutions for polynomial vector optimization problems on a nonempty closed constraint set without any convexity or compactness assumptions.

This optimization problem has the unique solution [x0, x1] = [0.4149, 0.1701], for which only the first and fourth constraints are active.

Trust-Region Constrained Algorithm (method='trust-constr')
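The excerpt quotes the solution but not the problem it belongs to. Assuming it is the constrained Rosenbrock example from the SciPy optimization tutorial (the constraints in the comment are reconstructed from memory, so treat them as an assumption), a call to `scipy.optimize.minimize` with `method='trust-constr'` could look like the sketch below. It requires NumPy and SciPy; the finite-difference Jacobian of the nonlinear constraint is paired with a `BFGS()` Hessian approximation.

```python
import numpy as np
from scipy.optimize import (BFGS, Bounds, LinearConstraint, NonlinearConstraint,
                            minimize, rosen, rosen_der, rosen_hess)

# Assumed problem: minimize the Rosenbrock function subject to
#   x0 + 2*x1 <= 1,  x0**2 + x1 <= 1,  x0**2 - x1 <= 1,  2*x0 + x1 = 1,
#   0 <= x0 <= 1,  -0.5 <= x1 <= 2.0
bounds = Bounds([0.0, -0.5], [1.0, 2.0])

# Rows of the matrix are the two linear constraints; lb == ub encodes the equality.
linear_constraint = LinearConstraint([[1, 2], [2, 1]], [-np.inf, 1], [1, 1])


def cons_f(x):
    """The two nonlinear constraint functions, each required to be <= 1."""
    return [x[0] ** 2 + x[1], x[0] ** 2 - x[1]]


nonlinear_constraint = NonlinearConstraint(cons_f, -np.inf, 1, jac="2-point", hess=BFGS())

res = minimize(rosen, np.array([0.5, 0.0]), method="trust-constr",
               jac=rosen_der, hess=rosen_hess,
               constraints=[linear_constraint, nonlinear_constraint],
               bounds=bounds)
print(res.x)
```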

Operations research (OR) offers a powerful toolkit for solving optimization problems across diverse fields. This is a curated collection of 25 solved OR problems, categorized by key problem type.

Derivatives are commonly used in optimization methods such as gradient descent, which adjusts the weights (increasing or decreasing them) to reach the minimum value of the cost function.

Optimization problems that must meet more than one objective are called multi-objective optimization problems and may have several optimal (Pareto-optimal) solutions. This manuscript presents the most important concepts of multi-objective optimization and a systematic review of the most cited articles in mechanical engineering in recent years.
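The reason a multi-objective problem can have several optimal solutions is Pareto dominance: one solution beats another only if it is at least as good in every objective and strictly better in at least one. As a minimal sketch, the code below filters a hypothetical list of candidate objective vectors (both objectives minimized) down to its non-dominated set; the function names are illustrative.

```python
def dominates(p, q):
    """True if p is no worse than q in every objective and strictly better in one (minimization)."""
    return all(a <= b for a, b in zip(p, q)) and any(a < b for a, b in zip(p, q))


def pareto_front(points):
    """Keep the points that no other point dominates (a simple O(n^2) scan)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (cost, weight) pairs for six candidate designs
candidates = [(1, 5), (2, 3), (3, 4), (4, 1), (5, 2), (2, 6)]
print(pareto_front(candidates))  # [(1, 5), (2, 3), (4, 1)]
```

None of the three surviving designs is better than the others in both objectives, so choosing among them requires extra preference information.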

