modeling_moss.py · fnlp/moss-base-7b at main

By: Ava

Models: moss-moon-003-base is the base language model of MOSS-003. It was initialized with CodeGen and further pre-trained on 100B Chinese tokens and 20B English tokens. The open-source list also includes open-source code for RL training in large language models, a 7B Chinese reward model based on openChineseLlama, and a 7B English reward model based on Llama-7B.

moss-moon-003-sft / modeling_moss.py (main): uploaded by txsun with huggingface_hub. MOSS-TTSD / app.py (main, 20.3 kB): latest commit 8420f87 by yhzx233, "fix: set smaller max_new_tokens"; the file begins with `import spaces` and `import gradio`.

fnlp/moss-moon-003-base · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

The base language model of MOSS was pre-trained on ~700B English, Chinese, and code tokens, including the PILE, BigQuery, BigPython, and our private Chinese corpus.

Model overview: moss-moon-003-base is the base language model of the MOSS-003 series. It was initialized with CodeGen and further pre-trained on 100B Chinese tokens and 20B English tokens.

This configuration class is used to instantiate a Moss2 model according to the specified arguments, which define the model architecture. Instantiating a configuration with the defaults will yield a similar configuration to …
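The idea that a configuration object's defaults fully determine one architecture, and that individual arguments override it, can be sketched with the standard library alone. This is a minimal illustration, not the real Moss2 configuration class: `ToyMossConfig`, its field names, and its default values are hypothetical placeholders, and the JSON round-trip only mimics the way a `config.json` file is shipped next to model weights.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ToyMossConfig:
    """Hypothetical stand-in for a model configuration class.

    Field names and defaults are illustrative placeholders, NOT the
    values used by the real MOSS or Moss2 configuration.
    """
    vocab_size: int = 32000
    hidden_size: int = 1024
    num_layers: int = 12
    num_heads: int = 16
    max_positions: int = 2048

    def __post_init__(self) -> None:
        # Each attention head gets an equal slice of the hidden size.
        if self.hidden_size % self.num_heads != 0:
            raise ValueError("hidden_size must be divisible by num_heads")

    @property
    def head_dim(self) -> int:
        return self.hidden_size // self.num_heads

    def to_json(self) -> str:
        # Serialize the arguments, similar in spirit to a config.json file.
        return json.dumps(asdict(self), indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ToyMossConfig":
        return cls(**json.loads(text))


default_cfg = ToyMossConfig()               # defaults define one architecture
custom_cfg = ToyMossConfig(num_layers=24)   # override a single argument
restored = ToyMossConfig.from_json(custom_cfg.to_json())
print(default_cfg.head_dim, restored == custom_cfg)  # prints: 64 True
```

Because every architectural choice lives in one serializable object, two configurations built from the same arguments compare equal, which is what makes "instantiating with the defaults yields a similar configuration" a checkable statement.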

tokenization_moss.py · fnlp/moss-moon-003-sft-plugin-int4 at main

moss-moon-003-sft-plugin-int4 / tokenization_moss.py (main): uploaded by Hzfinfdu with huggingface_hub.

moss-moon-003-base, the base language model of MOSS-003, was initialized with CodeGen and further pre-trained on 100B Chinese tokens and 20B English tokens. The model has seen 700B tokens during pre-training.

The Moss configuration module opens with the following code:

```python
""" Moss model configuration"""
from transformers.utils import logging
from transformers.configuration_utils import PretrainedConfig

logger = logging.get_logger(__name__)
```

MOSS README, table of contents:

- Open-source list
  - Models
  - Data
  - Engineering solutions
- Introduction
- Chat with MOSS
  - GPU requirements
  - Installation
  - Try MOSS
- Fine-tuning MOSS
  - Requirements
  - Start training
- Related links
- Future plans
- License

Open-source list. Models: moss…

- modules/models/modeling_moss.py · jacksao/CloudChatGPT at main

- modeling_moss2.py · fnlp/moss2-2_5b-chat at main

- app.py · fnlp/MOSS-TTSD at main

- modules/models/modeling_moss.py · JeasonLiu/ChatGPT at main

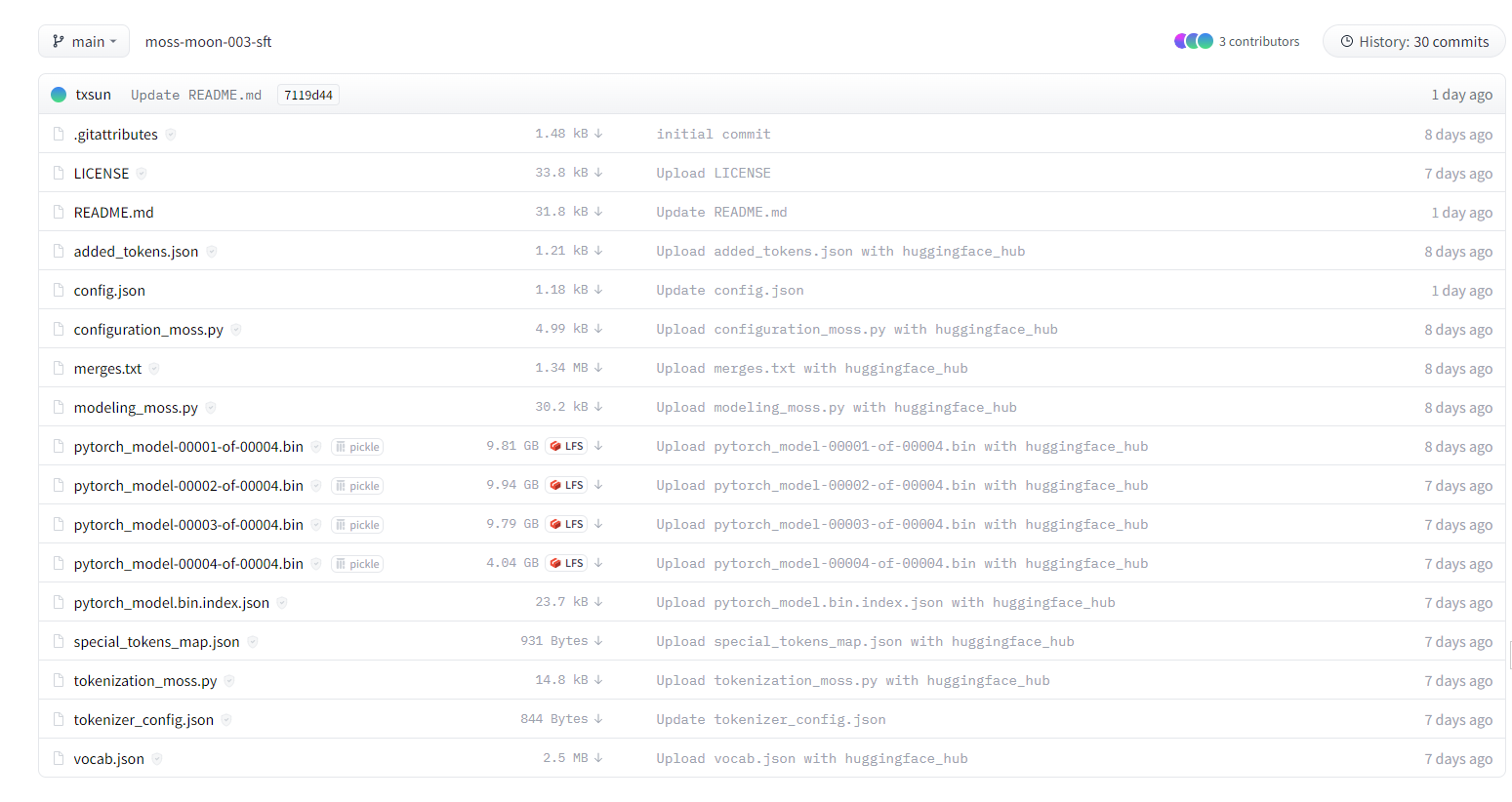

- fnlp/moss-moon-003-sft at main

Files include `pytorch_model-00004-of-00004.bin` (4.04 GB, stored with Git LFS, uploaded with huggingface_hub) and `pytorch_model.bin.index.json` (23.7 kB).
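The file names `pytorch_model-00004-of-00004.bin` and `pytorch_model.bin.index.json` indicate a checkpoint split into four shards plus an index. In the usual Hugging Face sharded-checkpoint layout, the index holds a `metadata` object and a `weight_map` that maps each parameter name to the shard file storing it. The sketch below, assuming that layout, groups parameters by shard and looks up the shard for a single parameter. The parameter names and the `total_size` value are made up for illustration and are not the real contents of the MOSS index.

```python
import json
from collections import defaultdict

# Hypothetical, heavily truncated index file. A real index lists every
# parameter; these parameter names and total_size are invented examples.
INDEX_JSON = """
{
  "metadata": {"total_size": 123456},
  "weight_map": {
    "embed.weight": "pytorch_model-00001-of-00004.bin",
    "layers.0.attn.weight": "pytorch_model-00001-of-00004.bin",
    "layers.27.mlp.weight": "pytorch_model-00004-of-00004.bin",
    "lm_head.weight": "pytorch_model-00004-of-00004.bin"
  }
}
"""


def params_by_shard(index: dict) -> dict:
    """Group parameter names by the shard file that stores them."""
    groups = defaultdict(list)
    for param_name, shard_file in index["weight_map"].items():
        groups[shard_file].append(param_name)
    # Sort shards and names so the result is deterministic.
    return {shard: sorted(names) for shard, names in sorted(groups.items())}


def shard_for(index: dict, param_name: str) -> str:
    """Return the shard file holding one parameter (KeyError if unknown)."""
    return index["weight_map"][param_name]


index = json.loads(INDEX_JSON)
grouped = params_by_shard(index)
for shard, names in grouped.items():
    print(shard, names)
print(shard_for(index, "lm_head.weight"))
```

With an index like this, a loader can open only the shards that contain the parameters it needs instead of reading every multi-gigabyte file.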

moss-moon-003-sft-plugin / modeling_moss.py (main): uploaded by txsun with huggingface_hub, commit 1422b07.

modules/models/modeling_moss.py · DaliAlmost/ChatGPT at main

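Moss-base-7b is a pre-trained language model with 7 billion parameters; it can serve as a base model for SFT (supervised fine-tuning) and similar training. Import from Transformers: to load the Moss 7B model using Transformers, use the following …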
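A minimal loading sketch with the Hugging Face `transformers` Auto classes follows, under several assumptions: the repository id `fnlp/moss-base-7b` is taken from this page's title; `trust_remote_code=True` is assumed to be required because the repository ships its own `modeling_moss.py`; and compatibility of that custom code with `AutoModelForCausalLM` and `generate` is assumed, not confirmed here. The helper names `load_moss_base` and `complete`, the `"cpu"` default, and `max_new_tokens=64` are hypothetical choices for illustration.

```python
def load_moss_base(model_id: str = "fnlp/moss-base-7b", device: str = "cpu"):
    """Hypothetical helper: load tokenizer and model (needs transformers + torch)."""
    # Imported lazily so this file can be imported without transformers.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # trust_remote_code=True lets transformers run the repository's own
    # modeling/tokenization code instead of a built-in architecture.
    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)
    model = model.to(device).eval()  # inference mode: disables dropout
    return tokenizer, model


def complete(prompt: str, tokenizer, model, max_new_tokens: int = 64) -> str:
    """Hypothetical helper: greedy-or-default continuation of one prompt."""
    import torch

    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Typical use would be `tok, mdl = load_moss_base(device="cuda")` followed by `complete("your prompt", tok, mdl)`. Note that 7 billion parameters in float32 occupy roughly 7e9 × 4 bytes ≈ 28 GB for the weights alone, so a GPU or a reduced-precision load is usually needed in practice.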

moss-base-7b is an AI model hosted on huggingface.co by fnlp. moss-moon-003-sft-int8 / modeling_moss.py: uploaded by txsun with huggingface_hub.

modules/models/modeling_moss.py · operacool/ChatGPT at main

- modules/models/modeling_moss.py · ChuanhuChatGPT-main at main (uploaded by aphenx with huggingface_hub, commit 75f82ab)
- modules/models/modeling_moss.py · newchatgpt at main (uploaded by JohnSmith9982, "Upload 80 files", commit 5cb0bc3)
