Marginal Density And Conditional Density From Joint Density

By: Ava

This document contains 11 practice problems involving joint probability distributions and density functions. The problems cover topics such as computing probabilities from joint distributions and finding marginal distributions from joint distributions.

If we know the joint density of X and Y, then we can use the definition to see if they are independent. But the definition is often used in a different way. If we know the marginal densities of X and Y and we know that they are independent, then we can use the definition to obtain the joint density as the product of the marginals.

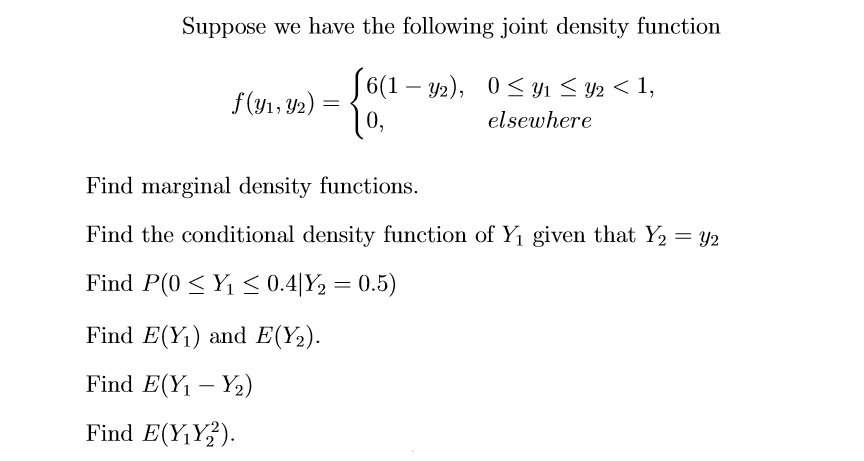

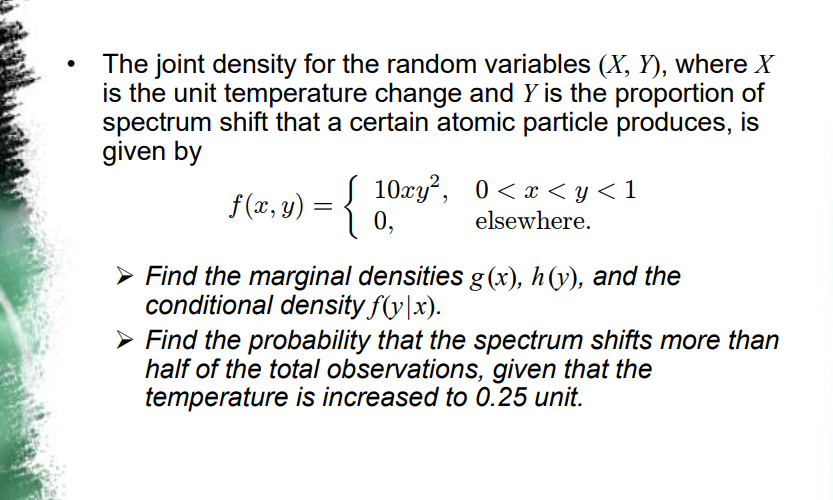

Joint Distribution and Density Functions Questions

Conditional probability density function: often simply called conditional density.

Joint probability density function: a probability density function that assigns probabilities to a set of random variables (see probability density function).

Marginal probability density function: a density for a random variable in which all other random variables have been integrated out.
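To make "integrated out" concrete, here is a minimal numerical sketch. The joint density f(x, y) = x + y on the unit square is a hypothetical example chosen for illustration, not one taken from the sources above, and the function names are likewise made up.

```python
def joint_density(x, y):
    """Hypothetical joint density: f(x, y) = x + y on [0, 1] x [0, 1], 0 elsewhere."""
    if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0:
        return x + y
    return 0.0


def marginal_x(x, n=1000):
    """Approximate f_X(x) by integrating f(x, y) over y in [0, 1] (midpoint rule)."""
    h = 1.0 / n
    return sum(joint_density(x, (j + 0.5) * h) for j in range(n)) * h


# Compare the numerical marginal with the closed form f_X(x) = x + 1/2.
for x in (0.0, 0.25, 0.5, 1.0):
    print(x, marginal_x(x), x + 0.5)
```

For this particular density the integral can be done by hand, which gives f_X(x) = x + 1/2 on [0, 1]; the printed columns should agree up to rounding.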

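6.1 Overview

In Section 5 we introduced the concept of a random variable and a variety of discrete and continuous random variables. However, we often need to consider several random variables at once.

The individual densities are recovered from the joint density by integration: $g(x) = \int f(x, y)\,dy$ and $h(y) = \int f(x, y)\,dx$.

Remark. The word marginal is used here to distinguish the joint density for (X, Y) from the individual densities g and h.

Visually, the shape of the conditional density of Y given X = $x$ is the vertical cross section at $x$ of the joint density graph above. The numerator determines the shape, and the denominator, the marginal density of X at $x$, rescales it so that it integrates to 1.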
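The cross-section picture can be checked numerically. The sketch below assumes a hypothetical joint density f(x, y) = x + y on the unit square (not from the sources above), takes the slice at a fixed x, and divides by the marginal so that the slice integrates to 1. All function names are illustrative.

```python
def joint_density(x, y):
    """Hypothetical joint density: f(x, y) = x + y on [0, 1] x [0, 1], 0 elsewhere."""
    if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0:
        return x + y
    return 0.0


def midpoint_integral(func, a, b, n=1000):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / n
    return sum(func(a + (k + 0.5) * h) for k in range(n)) * h


def conditional_density_given_x(x):
    """Return y -> f(x, y) / f_X(x), the normalized cross section at x."""
    fx = midpoint_integral(lambda y: joint_density(x, y), 0.0, 1.0)
    if fx <= 0.0:
        raise ValueError("f_X(x) must be positive to condition on X = x")
    return lambda y: joint_density(x, y) / fx


slice_at_quarter = conditional_density_given_x(0.25)
area = midpoint_integral(slice_at_quarter, 0.0, 1.0)
print(slice_at_quarter(0.5), area)
```

The numerator `joint_density(x, y)` fixes the shape of the slice; the division by `fx` only rescales it, which is why `area` comes out as 1 up to rounding.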

You'll use conditional probability distribution functions to calculate probabilities given some subset of x and some subset of y.

Joint, Marginal, and Conditional Distributions

We engineers often ignore the distinctions between joint, marginal, and conditional probabilities, to our detriment. An example of a joint probability density for two independent variables is shown below, along with the marginal distributions and conditional probability distributions. This distribution uses the same means and variances as the covariant case shown above, but the covariance between x and y is zero.

- Joint Distribution and Density Functions Questions

- Marginal probability density function

- Section 4: Bivariate Distributions

5.2.1 Joint PDFs and Expectation

The joint continuous distribution is the continuous counterpart of a joint discrete distribution. The concepts and formulas are therefore similar to the discrete ones, and the transition is much like the one from single-variable discrete RVs to continuous ones.

Mathematical Definition of Marginal Distribution

In a joint distribution, where multiple variables are considered simultaneously, the marginal distribution of one variable is obtained by summing or integrating the joint distribution over the other variables.

If the conditional distribution of Y given X is a continuous distribution, then its probability density function is known as the conditional density function. [1] The properties of a conditional distribution, such as the moments, are often referred to by corresponding names such as the conditional mean and conditional variance.

I know the joint distribution of two variables is equal to the conditional distribution multiplied by the marginal distribution of the 'given' variable, but I am not sure how to find the marginals from the information given.

Deriving the conditional distribution of X given Y = y is far from obvious. As explained in the lecture on random variables, whatever value of y we choose, we are conditioning on a zero-probability event: $P(Y = y) = 0$. Therefore, the standard formula (conditional probability equals joint probability divided by marginal probability) cannot be used. However, it turns out that the definition of conditional probability can be extended to handle this case.

That is, the joint density f is the product of the marginal densities g and h: $f(x, y) = g(x)\,h(y)$. The word marginal is used here to distinguish the joint density for (X, Y) from the individual densities g and h.

Marginal Distribution: definition, formula and examples using a frequency table. Difference between a conditional distribution and a marginal distribution.
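The product rule above suggests a simple numerical check for independence: compute both marginals from the joint density and compare their product with the joint density on a grid. The sketch below assumes densities supported on the unit square; the two example densities and the function names are hypothetical, and agreement on a finite grid is evidence rather than proof.

```python
def midpoint_integral(func, a, b, n=200):
    """Midpoint-rule approximation of the integral of func over [a, b]."""
    h = (b - a) / n
    return sum(func(a + (k + 0.5) * h) for k in range(n)) * h


def looks_independent(joint, grid, tol=1e-6):
    """Check f(x, y) == f_X(x) * f_Y(y) at every grid point (support assumed [0, 1]^2)."""
    for x in grid:
        fx = midpoint_integral(lambda y: joint(x, y), 0.0, 1.0)
        for y in grid:
            fy = midpoint_integral(lambda u: joint(u, y), 0.0, 1.0)
            if abs(joint(x, y) - fx * fy) > tol:
                return False
    return True


def product_density(x, y):
    """Hypothetical density 4xy = (2x)(2y): factorizes, so X and Y are independent."""
    return 4.0 * x * y


def sum_density(x, y):
    """Hypothetical density x + y: does not factorize into g(x) * h(y)."""
    return x + y


grid = [0.1, 0.3, 0.5, 0.7, 0.9]
print(looks_independent(product_density, grid))
print(looks_independent(sum_density, grid))
```

The first density passes the check because its marginals are 2x and 2y; the second fails because (x + 1/2)(y + 1/2) differs from x + y.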

Example problem on how to find the marginal probability density function from a joint probability density function.

Y follows a normal distribution; E(Y | x), the conditional mean of Y given x, is linear in x; and Var(Y | x), the conditional variance of Y given x, is constant. Based on these three stated assumptions, we found the conditional distribution of Y given X = x. Now, we'll add a fourth assumption, namely that X follows a normal distribution.
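For reference, when (X, Y) is bivariate normal the linear conditional mean and constant conditional variance take a standard explicit form. The symbols $\mu_X$, $\mu_Y$, $\sigma_X$, $\sigma_Y$ and the correlation $\rho$ are notation assumed here; the excerpt above does not name them.

$$
Y \mid X = x \;\sim\; N\!\left(\mu_Y + \rho\,\frac{\sigma_Y}{\sigma_X}\,(x - \mu_X),\;\; \sigma_Y^2\,(1 - \rho^2)\right)
$$

The mean is linear in $x$ with slope $\rho\,\sigma_Y/\sigma_X$, and the variance $\sigma_Y^2(1 - \rho^2)$ does not depend on $x$, matching the stated assumptions. Setting $\rho = 0$ recovers the marginal distribution of Y, as expected for independent normal variables.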

- Chapter 11 Conditional densities

- Marginal and Conditional Densities

- UNIT-II MULTIPLE RANDOM VARIABLES

- Joint probability distributions

- Joint, Marginal, and Conditional Distributions

As an example of applying the third condition in Definition 5.2.1, the joint cdf for continuous random variables \(X\) and \(Y\) is obtained by integrating the joint density function over a set \(A\) of the form \(A = \{(u, v) : u \le x,\ v \le y\}\), that is, \(F(x, y) = \int_{-\infty}^{x} \int_{-\infty}^{y} f(u, v)\,dv\,du\).

Joint Random Variables

- Use a joint table, density function or CDF to solve probability questions

- Think about conditional probabilities with joint variables (which might be continuous)

- Use and find independence of random variables

- Use and find expectation of random variables
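The joint cdf described above, an integral of the joint density over a lower-left quadrant, can be approximated with a double midpoint sum. This is a sketch under an assumed example: the density f(x, y) = x + y on the unit square is hypothetical, as are the function names.

```python
def joint_density(x, y):
    """Hypothetical joint density: f(x, y) = x + y on [0, 1] x [0, 1], 0 elsewhere."""
    if 0.0 <= x <= 1.0 and 0.0 <= y <= 1.0:
        return x + y
    return 0.0


def joint_cdf(x, y, n=200):
    """Approximate F(x, y) = P(X <= x, Y <= y) with a double midpoint sum."""
    # The density vanishes outside the unit square, so clip the quadrant to it.
    a = min(max(x, 0.0), 1.0)
    b = min(max(y, 0.0), 1.0)
    hu, hv = a / n, b / n
    total = 0.0
    for i in range(n):
        u = (i + 0.5) * hu
        for j in range(n):
            v = (j + 0.5) * hv
            total += joint_density(u, v)
    return total * hu * hv


print(joint_cdf(0.5, 0.5))  # closed form: (x^2 * y + x * y^2) / 2
print(joint_cdf(1.0, 1.0))  # total probability
```

For this density the closed form inside the unit square is F(x, y) = (x²y + xy²)/2, so the two printed values should be close to 0.125 and 1.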


Given the joint distribution of X and Y, we sometimes call the distribution of X (ignoring Y) and the distribution of Y (ignoring X) the marginal distributions. If X and Y assume values in {1, 2, ..., n}, then we can view $A_{i,j} = P\{X = i, Y = j\}$ as the entries of an n × n matrix. Let's say I don't care about Y. I just want to know P{X = i}. This is the sum of row i of the matrix: $P\{X = i\} = \sum_{j} A_{i,j}$.

Figure: The joint probability density function, p(d1, d2), and the two conditional probability density functions computed from it, p(d1 | d2) and p(d2 | d1). MatLab script eda03_14.
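The matrix view translates directly into row and column sums. The 3 × 3 table below is made-up illustrative data, and the code uses 0-based indices where the text uses 1-based ones.

```python
# Hypothetical joint table: joint[i][j] = P(X = i + 1, Y = j + 1).
joint = [
    [0.10, 0.05, 0.05],
    [0.10, 0.20, 0.10],
    [0.05, 0.15, 0.20],
]

# Marginal of X: sum each row (Y is summed out).
marginal_x = [sum(row) for row in joint]

# Marginal of Y: sum each column (X is summed out).
marginal_y = [sum(col) for col in zip(*joint)]

# Conditional distribution of Y given X = 1: the first row divided by its total.
cond_y_given_x1 = [p / marginal_x[0] for p in joint[0]]

print(marginal_x)
print(marginal_y)
print(cond_y_given_x1)
```

Each marginal sums to 1, and so does the conditional row, since dividing by the row total is exactly the "joint divided by marginal" rule in the discrete case.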

I'm not sure I understand the distinction. To me it seems the Gelman-Speed paper asks whether given conditional densities uniquely specify a joint density. My original question, on the other hand, asks the reverse: can a given joint density always be ...

Definition 42.1 (Marginal Distribution) Suppose we have the joint p.d.f. $f(x, y)$ of two continuous random variables $X$ and $Y$. The marginal p.d.f. of $X$ is obtained by integrating out $y$: $f_X(x) = \int f(x, y)\,dy$.

Your intuition is correct: the marginal distribution of a normal random variable with a normal mean is indeed normal. To see this, we first re-frame the joint distribution as a product of normal densities by completing the square:
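A sketch of that computation follows, under assumed notation that does not appear in the excerpt: $X \mid \mu \sim N(\mu, \sigma^2)$ and $\mu \sim N(m, \tau^2)$. The exponent of the joint density of $(X, \mu)$ is $-\tfrac{1}{2}$ times

$$
\frac{(x - \mu)^2}{\sigma^2} + \frac{(\mu - m)^2}{\tau^2}
= \frac{(\mu - \mu^{*})^2}{s^2} + \frac{(x - m)^2}{\sigma^2 + \tau^2},
\qquad
\mu^{*} = \frac{\tau^2 x + \sigma^2 m}{\sigma^2 + \tau^2},
\quad
s^2 = \frac{\sigma^2 \tau^2}{\sigma^2 + \tau^2}.
$$

The first term on the right is the kernel of a normal density in $\mu$, which integrates to a constant that does not depend on $x$. What remains after integrating out $\mu$ is the kernel of

$$
X \sim N\!\left(m,\; \sigma^2 + \tau^2\right).
$$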

Conditional probability density function

by Marco Taboga, PhD

The probability distribution of a continuous random variable can be characterized by its probability density function (pdf). When the probability distribution of the random variable is updated, by taking into account some information that gives rise to a conditional probability distribution, then such a distribution can be characterized by a conditional probability density function.

$$f_{Y \mid X}(y \mid x) = \frac{f_{X,Y}(x, y)}{f_X(x)}$$

In word equations: conditional density of Y given X = (joint density of X and Y) / (marginal density of X). And, of course, the symmetric equation holds for the conditional distribution of X given Y.

Joint distribution of bivariate random variables

In general, if X and Y are two random variables, the probability distribution that defines their simultaneous behavior is called a joint probability distribution.

The event {Y = y} has zero probability when Y has a continuous distribution. This poses technical difficulties, which we work through below. We will assume that the marginal density $f_Y$ of the random variable Y is continuous at y, and also that $f_Y(y) > 0$. Then for every ε > 0 the event {Y ∈ (y, y + ε]} has positive probability, and we can condition on it without problems.

After reading this post, you will know:

- Joint probability is the probability of two events occurring simultaneously.

- Marginal probability is the probability of an event irrespective of the outcome of another variable.

- Conditional probability is the probability of one event occurring in the presence of a second event.
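The limiting argument for conditioning on {Y ∈ (y, y + ε]} can be written out as follows. This is a sketch, assuming the joint density $f$ is regular enough near $y$ for the limit to pass inside the integral; the symbols $F_{X \mid Y}$ and $f_{X \mid Y}$ are notation introduced here.

$$
P\big(X \le x \mid Y \in (y, y + \varepsilon]\big)
= \frac{\int_{-\infty}^{x} \int_{y}^{y + \varepsilon} f(u, v)\,dv\,du}{\int_{y}^{y + \varepsilon} f_Y(v)\,dv}
$$

Dividing the numerator and the denominator by $\varepsilon$ and letting $\varepsilon \to 0$ gives

$$
F_{X \mid Y}(x \mid y) = \frac{\int_{-\infty}^{x} f(u, y)\,du}{f_Y(y)},
\qquad
f_{X \mid Y}(x \mid y) = \frac{f(x, y)}{f_Y(y)},
$$

where the second expression follows by differentiating the first with respect to $x$. The assumption $f_Y(y) > 0$ is what keeps the denominator away from zero.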

Marginal probability density function

by Marco Taboga, PhD

Consider a continuous random vector, whose entries are continuous random variables. Each entry of the random vector has a univariate distribution described by a probability density function (pdf). This is called the marginal probability density function, to distinguish it from the joint probability density function, which describes the distribution of all the entries of the random vector taken together.

Multiple Random Variables: Vector random variables, Joint distribution function and properties, Marginal distribution functions, Joint density function and properties, Marginal density functions, Joint Conditional distribution and density functions, Statistical independence, Distribution and density of sum of random variables, Central limit theorem.
