How To Disallow a Subdomain with a Robots.txt File?

By: Ava

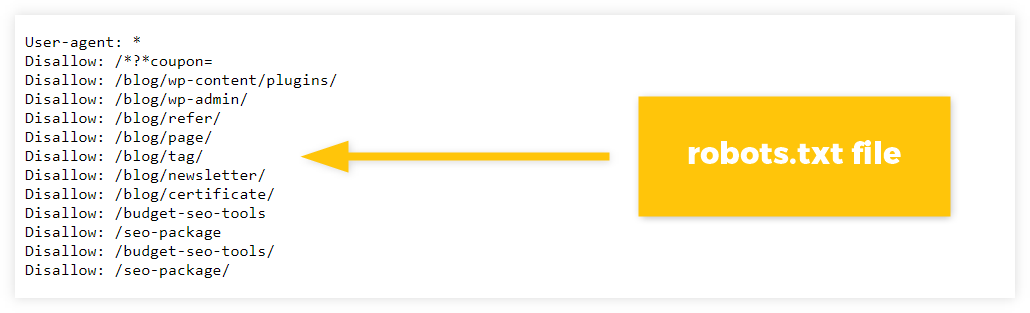

Robots.txt is one of the simplest files on a website, but it’s also one of the easiest to mess up. Just one character out of place can wreak havoc.

Robots.txt and any sitemap cannot refer to any other site. Subdomains are sites unto themselves, which means that example.com and sub.example.com are completely different sites.

From robotstxt.org, “Where to put it”: The short answer: in the top-level directory of your web server. The longer answer: when a robot looks for the “/robots.txt” file for a URL, it strips the path component from the URL (everything from the first single slash) and puts “/robots.txt” in its place.
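
Because every host has its own file, the location a crawler checks can be derived mechanically from any page URL: keep the scheme and host (plus the port, if present) and replace everything else with `/robots.txt`. The minimal Python sketch below illustrates that rule with the standard library's `urllib.parse`; `robots_url_for` is a hypothetical helper name and the URLs are placeholders.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url_for(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url.

    Keeps only the scheme and network location (host, plus port if any)
    and replaces path, query and fragment with "/robots.txt".
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # example.com and sub.example.com map to two different files.
    for url in ("https://example.com/shop/index.html",
                "https://sub.example.com/shop/index.html"):
        print(url, "->", robots_url_for(url))
```

The first URL maps to `https://example.com/robots.txt` and the second to `https://sub.example.com/robots.txt`, which is why a file on the main domain cannot govern the subdomain.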

I have multiple physical subdomains and I don’t want to change the robots.txt file of any of those subdomains. Is there any way to disallow all the subdomains from my main domain?

In the root directory of the subdomain’s website, add a file called robots.txt containing `User-agent: *` and `Disallow: /`. This tells web crawlers not to crawl the site at all.

Your robots.txt file, one of the most important files on your website, lets search engine crawlers know whether they should crawl a web page or leave it alone.
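
To check that a disallow-all file behaves as expected before deploying it, Python's standard `urllib.robotparser` module can parse the text directly, with no network access. This is a minimal sketch: `sub.example.com` is a placeholder host, `parse_robots` is a hypothetical helper name, and the module implements the general robots.txt rules rather than any particular search engine's exact matching.

```python
from urllib.robotparser import RobotFileParser

# A disallow-all file for a subdomain's document root.
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"


def parse_robots(text: str) -> RobotFileParser:
    """Build a parser from robots.txt text without fetching anything."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


rp = parse_robots(DISALLOW_ALL)
for url in ("https://sub.example.com/", "https://sub.example.com/blog/post.html"):
    # can_fetch only looks at the URL path; the caller must make sure the
    # file really came from this URL's host.
    print(url, rp.can_fetch("Googlebot", url))
```

Both URLs print `False`. Because `can_fetch` evaluates only the path, the result is meaningful only for the host the file is actually served from.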

I have two different sites in IIS 7 that both point to the same folder. They have different subdomains (www.sitename.com and foo.sitename.com) and are essentially the same website, but …

By Ruslan Yakushev: The IIS Search Engine Optimization Toolkit includes a Robots Exclusion feature that you can use to manage the content of the Robots.txt file for your website.

Discover the secrets of robots.txt! Learn what it is, how to optimize it for SEO, and boost your website’s search engine rankings.

Block Search Indexing with noindex

Did you know you can serve a robots.txt file from an nginx configuration file? We will show you how to configure a robots.txt file and serve it directly from the configuration file.

Hi Kyle, yes, you can block an entire subdomain via robots.txt. However, you’ll need to create a robots.txt file, place it in the root of the subdomain, and then add the code to direct …

In the public/.htaccess file, create a new rule indicating that if the request is for one of the specified subdomains, the path to robots.txt will show the content of robots…
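
When several subdomains share one document root, one option is to choose the robots.txt content per request from the `Host` header, which is the idea behind the `.htaccess` rule. The sketch below expresses the same idea as a plain Python WSGI application. This is an illustration under stated assumptions, not a replacement for the nginx or Apache configuration; `BLOCKED_HOSTS` and the host names in it are hypothetical.

```python
# Hypothetical subdomains that should not be crawled; adjust to your setup.
BLOCKED_HOSTS = {"beta.example.com", "foo.example.com"}

ALLOW_ALL = b"User-agent: *\nDisallow:\n"
DISALLOW_ALL = b"User-agent: *\nDisallow: /\n"


def robots_body(host_header: str) -> bytes:
    """Choose robots.txt content from the request's Host header.

    The port is stripped naively (IPv6 literals are not handled).
    """
    hostname = host_header.split(":", 1)[0].strip().lower()
    return DISALLOW_ALL if hostname in BLOCKED_HOSTS else ALLOW_ALL


def app(environ, start_response):
    """Minimal WSGI app: answers /robots.txt per host, 404 for everything else."""
    if environ.get("PATH_INFO") == "/robots.txt":
        body = robots_body(environ.get("HTTP_HOST", ""))
        start_response("200 OK", [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("Content-Length", str(len(body))),
        ])
        return [body]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found\n"]


if __name__ == "__main__":
    # Call the app directly with two fake requests instead of starting a server.
    def show_status(status, headers):
        print(status)

    for host in ("www.example.com", "foo.example.com:8000"):
        environ = {"PATH_INFO": "/robots.txt", "HTTP_HOST": host}
        print(host, b"".join(app(environ, show_status)))
```

For local testing behind a real socket, the standard library's `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` is enough. In production, the same host check normally lives in the web server configuration.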

I have a site with the domain www.example.com and a subdomain 123.example.com. Can I add a robots.txt file to www.example.com to block search engines from crawling the subdomain?

The “real” robots.txt will be a bit more complex. It’s a good idea to add this final robots.txt to the staging environment already.

Is it possible to make a blanket disallow for all subdomains of dev.example.com? Short answer: no. You will need a robots.txt file for each and every site.
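
Since there is no blanket rule, the practical fix is to make sure every site's document root gets its own file. This is a minimal sketch, assuming each subdomain is served from its own directory. The directory names are hypothetical, and the demo writes into a temporary directory rather than a real server path.

```python
import tempfile
from pathlib import Path

DISALLOW_ALL = "User-agent: *\nDisallow: /\n"


def write_disallow_all(docroots):
    """Write the same disallow-all robots.txt into every document root.

    No single file covers other hosts, so each subdomain's own root
    needs its own copy. Returns the paths that were written.
    """
    written = []
    for root in docroots:
        target = Path(root) / "robots.txt"
        target.write_text(DISALLOW_ALL, encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    # Throwaway directories standing in for hypothetical docroots
    # such as /var/www/a.dev.example.com.
    with tempfile.TemporaryDirectory() as tmp:
        roots = [Path(tmp) / name for name in ("a.dev.example.com", "b.dev.example.com")]
        for root in roots:
            root.mkdir()
        for path in write_disallow_all(roots):
            print(path.parent.name, path.read_text(encoding="utf-8").splitlines())
```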

…to the robots-beta.txt file. This should send all crawlers (that respect robots.txt) to the correct file with “Disallow: /” if they are coming from your site’s beta subdomain.

Learn how to use robots.txt to improve your WordPress website’s SEO and avoid common mistakes that could hurt your search performance.

Introduction: Often, your website will get crawled by different search engines and bots from around the world. Sometimes a bot may crawl the site in a way that uses a lot of bandwidth.
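
Rules can also be scoped to a single crawler, which is the usual way to stop one bandwidth-hungry bot while leaving the others alone. The sketch below checks such a file with `urllib.robotparser`; `HungryBot` is a hypothetical user-agent token. Python's parser matches user-agent names by case-insensitive substring, which may differ in detail from how an individual crawler picks its group.

```python
from urllib.robotparser import RobotFileParser

# "HungryBot" is a hypothetical user-agent token standing in for a crawler
# that uses too much bandwidth; every other crawler keeps full access.
ROBOTS_TXT = """\
User-agent: HungryBot
Disallow: /

User-agent: *
Disallow:
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

for agent in ("HungryBot", "Googlebot"):
    print(agent, rp.can_fetch(agent, "https://www.example.com/page.html"))
```

`HungryBot` is refused and `Googlebot` is allowed, because an empty `Disallow:` line permits everything for the `*` group.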

This article is designed to help you create a robots.txt file in cPanel. To create your robots.txt file, simply follow the steps in this tutorial.

You can disallow all search engine bots from crawling your site using the robots.txt file. In this article, you will learn exactly how to do it!

What is robots.txt? How to add robots.txt on Nginx?

- 14 Common WordPress Robots.txt Mistakes to Avoid

- Using robots.txt with Cloudflare Pages

- How do I disallow an entire directory with robots.txt?

- Managing Robots.txt and Sitemap Files

If you’re managing a production-like environment and want to keep bots from crawling it, it’s customary to add a robots.txt file at the root of the website that disallows everything.
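
One way to keep a staging copy from being crawled without maintaining two files by hand is to generate robots.txt from the deployment environment. This sketch assumes a hypothetical `SITE_ENV` environment variable; the name and its values are illustrative, not a convention of any particular platform.

```python
import os

DISALLOW_ALL = "User-agent: *\nDisallow: /\n"
ALLOW_ALL = "User-agent: *\nDisallow:\n"


def robots_for_environment(env_name=None):
    """Return robots.txt text for the current deployment environment.

    SITE_ENV is a hypothetical variable name. Anything other than
    "production" (including an unset variable) gets the disallow-all file.
    """
    if env_name is None:
        env_name = os.environ.get("SITE_ENV", "")
    return ALLOW_ALL if env_name.strip().lower() == "production" else DISALLOW_ALL


if __name__ == "__main__":
    for name in ("production", "staging", ""):
        print(repr(name), "->", robots_for_environment(name).splitlines())
```

The function fails closed: anything not explicitly `production` gets `Disallow: /`. The trade-off is that a production deployment with a missing variable would block its own crawlers, so it is worth checking the served file after each release.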

You can do that by placing a robots.txt file in the root directory of your subdomain. So for klient.example.com, place a robots.txt file with the following content: `User-agent: *` …

Using robots.txt with Cloudflare Pages

Cloudflare Pages is a great way to host your static website. It’s fast, easy, and free to start with. While egress traffic is free, you still pay in …

I have my website deployed on Vercel. The site is a Next.js application deployed directly on Vercel (not behind nginx or any other web server). There are two domains …

Learn what a robots.txt file is, what the robots.txt best practices and limitations are, and how to create and optimize it for better SEO performance.

Another reason could also be that the robots.txt file is blocking the URL from Google’s web crawlers, so they can’t see the tag. To unblock your page from Google, you must edit your robots.txt file.

Doing a log file analysis, we see that around 25% of our Googlebot hits are going to robots.txt files (as there is one robots.txt for each brand subdirectory).
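
Because a crawler must fetch a page to see its `noindex` rule, a page you want removed from results must not be disallowed in robots.txt. This minimal sketch flags that conflict with `urllib.robotparser`. `noindex_is_visible`, the sample rules and the URLs are hypothetical, and the check covers only robots.txt, not other reasons a crawler might fail to reach the page.

```python
from urllib.robotparser import RobotFileParser


def noindex_is_visible(robots_txt: str, url: str, agent: str = "Googlebot") -> bool:
    """Return True if `agent` is allowed to crawl `url`.

    A noindex rule (a <meta name="robots" content="noindex"> tag or an
    "X-Robots-Tag: noindex" response header) only works if the crawler can
    fetch the page; if robots.txt blocks the URL, the rule is never seen.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"

if __name__ == "__main__":
    for url in ("https://example.com/private/old-page.html",
                "https://example.com/drafts/old-page.html"):
        ok = noindex_is_visible(ROBOTS_TXT, url)
        print(url, "noindex visible" if ok else "blocked: noindex will not be seen")
```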

Learn how to write robots.txt files that control how search engine bots crawl your site. Discover how these files can affect your SEO performance.

I have a subdomain, e.g. blog.example.com, and I want this domain not to be indexed by Google or any other search engine. I put my robots.txt file in the ‘blog’ folder on the server.

What is a robots.txt file? The robots.txt file is a simple text file located in the root directory of a website domain. It provides instructions that guide search engine web crawlers.

A robots.txt file lives at the root of your site. Learn how to create a robots.txt file, see examples, and explore robots.txt rules.

Using Robots.txt to Disallow or Allow Bot Crawlers
