Converting A Spark Dataframe To A Scala Map Collection

By: Ava

Hello all, I've been tasked with converting Scala Spark code to PySpark code with minimal changes (more or less a literal translation).

Creating SparkDataFrames: with a SparkSession, applications can create SparkDataFrames from a local R data frame, from a Hive table, or from other data sources.

Just to consolidate the answers for Scala users too, here's how to transform a Spark DataFrame to a DynamicFrame (the method fromDF doesn't exist in the Scala API of the ...

Aggregate to a Map in Spark

In this article, we will discuss how to convert the values of a DataFrame column to a list using Spark and Scala. We will provide step-by-step explanations along with code examples to ...

In my example, I am converting a JSON file to a DataFrame and then to a Dataset. In the Dataset, I have added an additional attribute (newColumn) and converted it back ...

Convert all the columns of a Spark DataFrame into JSON format and then include the JSON-formatted data as a column in another (parent) DataFrame.
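A minimal sketch of the first half of that task, packing every column of a DataFrame into one JSON string column with `to_json` and `struct`. The object name, the `json` column name and the sample data are made up for illustration. How the result is attached to the parent DataFrame depends on a join key the question does not give, so that step is left out.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, struct, to_json}

object RowsAsJsonColumn {
  // Packs every column of `df` into a single JSON string column named "json"
  // (the column name is an assumption, not taken from the original question).
  def withJsonColumn(df: DataFrame): DataFrame =
    df.select(to_json(struct(df.columns.map(col): _*)).as("json"))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("json-column").getOrCreate()
    import spark.implicits._

    // Made-up sample data standing in for the child DataFrame.
    val child = Seq((1, "a"), (2, "b")).toDF("id", "name")
    withJsonColumn(child).show(truncate = false)

    spark.stop()
  }
}
```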

I have a DataFrame and I want to convert it into a sequence of sequences and vice versa. Now the thing is, I want to do it dynamically, and write something which runs for ...

Try this: df.select("measured_value").map(_.getInt(0)).collect.toSeq Some useful examples related to the topic can be found here. Please also remember that collect leads to ...

Before version 2.0, Apache Spark did not support native CSV output on disk. You have four available solutions though: you can convert your DataFrame into an RDD: def ...
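The one-liner suggested above needs an implicit encoder in scope before `map` will compile. Here is a small sketch of it as a helper; the object name, function name and sample data are illustrative, and the column is assumed to be a non-null integer column.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ColumnToSeq {
  // Collects one integer column to the driver as a Scala Seq.
  // Assumes the column holds non-null Int values; getInt fails on nulls.
  def intColumn(spark: SparkSession, df: DataFrame, name: String): Seq[Int] = {
    import spark.implicits._ // supplies the Encoder[Int] that Dataset.map needs
    df.select(name).map(_.getInt(0)).collect().toSeq
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("column-to-seq").getOrCreate()
    import spark.implicits._

    // Made-up sample data.
    val df = Seq(("s1", 10), ("s2", 20), ("s3", 30)).toDF("sensor", "measured_value")
    println(intColumn(spark, df, "measured_value"))

    spark.stop()
  }
}
```

Keep in mind that `collect()` pulls every value to the driver, so this only suits columns that fit comfortably in driver memory.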

I am working with Spark DataFrames and I need to group by a column and collect the column values of the grouped rows into an array as a new column. Example ...

Hi friends, in this post I am going to explain how we can convert a Spark DataFrame to a Map. Input DataFrame and output map:

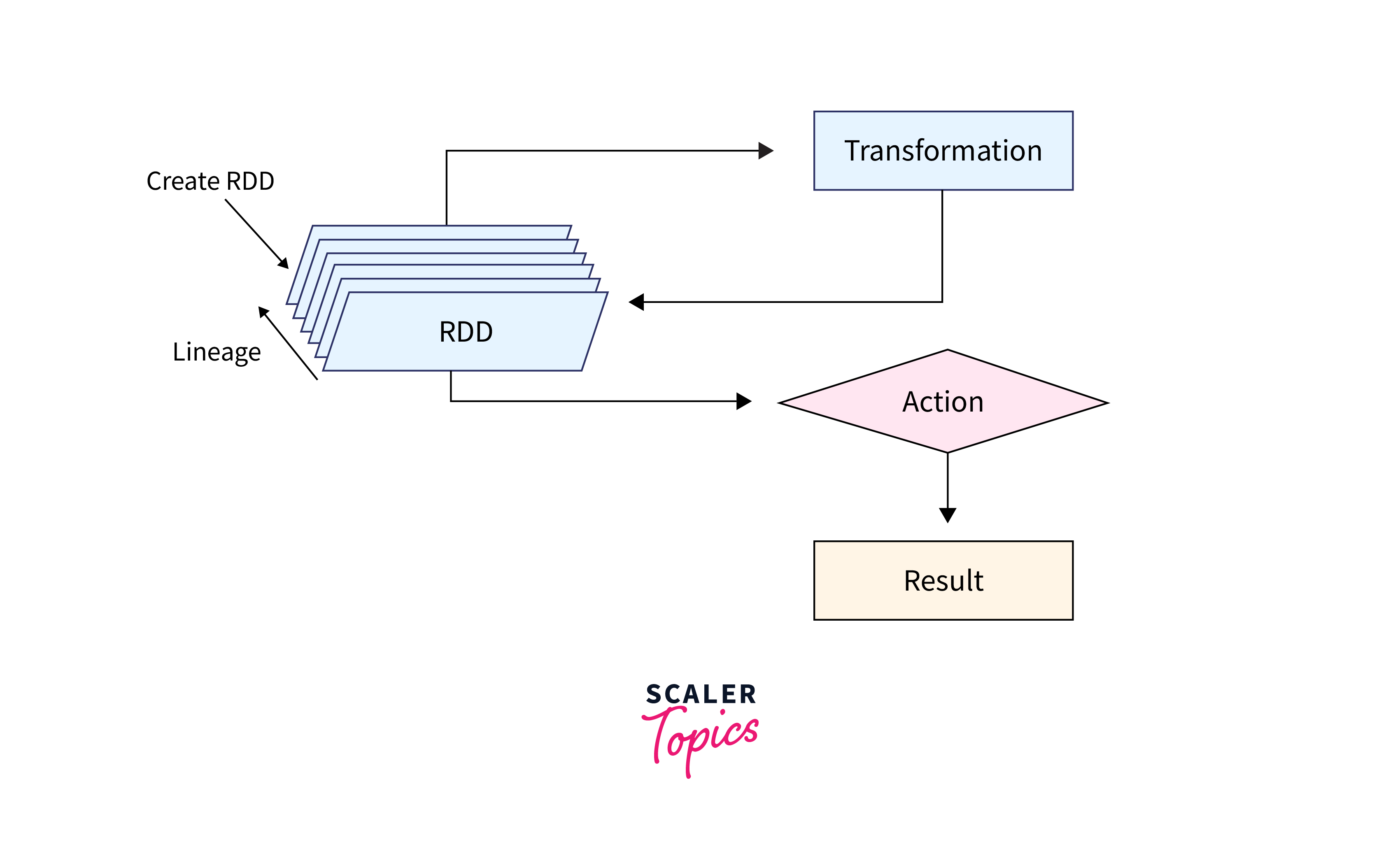
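Since the picture does not show the code, here is one possible sketch of such a conversion. It assumes a two-column DataFrame whose first column is a string key and whose second column is an integer value; the names and the sample data are made up.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object DataFrameToMap {
  // Collects a (String, Int) DataFrame to the driver and builds an immutable Map.
  // If a key occurs more than once, the pair that toMap sees last wins.
  def toScalaMap(df: DataFrame): Map[String, Int] =
    df.collect().map(row => row.getString(0) -> row.getInt(1)).toMap

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("df-to-map").getOrCreate()
    import spark.implicits._

    // Made-up input DataFrame.
    val df = Seq(("apple", 3), ("banana", 5)).toDF("fruit", "count")
    println(toScalaMap(df))

    spark.stop()
  }
}
```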

Converting a Spark RDD to a DataFrame or Dataset can lead to more efficient processing and better performance. DataFrame and Dataset offer type safety through ...

fromDF(dataframe, glue_ctx, name): converts a DataFrame to a DynamicFrame by converting DataFrame fields to DynamicRecord fields. Returns the new DynamicFrame.

I don't think your question makes sense: in your outermost Map, I only see you trying to stuff values into it. You need to have key/value pairs in your outermost Map.

Convert Spark DataFrame column to Python list

One easy way to manually create a PySpark DataFrame is from an existing RDD. First, let's create a Spark RDD from a collection (a list) by calling the parallelize() function from ...

In PySpark, MapType is a data type (not a function) used to define a column that holds key-value pairs, much like a Python dictionary. The MapType ...

PySpark: converting a column of type 'map' to multiple columns in a DataFrame

How can I convert an RDD (org.apache.spark.rdd.RDD[org.apache.spark.sql.Row]) to a DataFrame (org.apache.spark.sql.DataFrame)? I converted a DataFrame to an RDD using .rdd.

How can I create a Spark Dataset with a BigDecimal at a given precision? See the following example in the Spark shell. You will see I can create a DataFrame with my ...
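For the first question, a common route is `SparkSession.createDataFrame(rowRDD, schema)`. The sketch below makes a round trip and reuses the schema of the original DataFrame; the object name, helper name and sample data are illustrative only.

```scala
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.StructType

object RddToDataFrame {
  // Rebuilds a DataFrame from an RDD[Row]; the schema must describe the rows.
  def fromRows(spark: SparkSession, rows: RDD[Row], schema: StructType): DataFrame =
    spark.createDataFrame(rows, schema)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("rdd-to-df").getOrCreate()
    import spark.implicits._

    val original = Seq((1, "a"), (2, "b")).toDF("id", "name") // made-up data
    val asRdd: RDD[Row] = original.rdd                        // DataFrame -> RDD[Row]
    val restored = fromRows(spark, asRdd, original.schema)    // RDD[Row] -> DataFrame
    restored.printSchema()

    spark.stop()
  }
}
```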

@user3483203 yep, I created the DataFrame in the notebook with the Spark and Scala interpreter, and used '%pyspark' while trying to convert the DataFrame into a pandas DataFrame.

I'm new to Spark. I have a DataFrame that contains the results of some analysis. I converted that DataFrame into JSON so I could display it in a Flask app: results = ...

Array and Collection Operations: this document covers techniques for working with array columns and other collection data types in PySpark. We ...

Apache Spark is an open-source, distributed analytics and processing system that enables data engineering and data science at scale. It simplifies the development of ...

The common approach to using a method on DataFrame columns in Spark is to define a UDF (User-Defined Function, see here for more information). For your case: import ...

I am new to Scala/Spark. I am working on a Scala/Spark application that selects a couple of columns from a Hive table and then converts them into a mutable Map with the first ...
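The question is cut off, so the following is only a guess at the intent: it assumes the first selected column becomes the key and the second the value. A local DataFrame stands in for the Hive table, and all names are hypothetical.

```scala
import scala.collection.mutable
import org.apache.spark.sql.{DataFrame, SparkSession}

object DataFrameToMutableMap {
  // Assumption: column 0 is a String key and column 1 is a Long value.
  def toMutableMap(df: DataFrame): mutable.Map[String, Long] = {
    val result = mutable.Map.empty[String, Long]
    // collect() brings the rows to the driver, where the map is filled.
    df.collect().foreach(row => result.put(row.getString(0), row.getLong(1)))
    result
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("mutable-map").getOrCreate()
    import spark.implicits._

    // Made-up stand-in for the columns selected from the Hive table.
    val df = Seq(("x", 1L), ("y", 2L)).toDF("key", "value")
    val m = toMutableMap(df)
    m.put("z", 3L) // the map can still be changed on the driver afterwards
    println(m)

    spark.stop()
  }
}
```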

Convert spark dataframe to sequence of sequences and vice versa in Scala

In this PySpark article, I will explain the usage of collect() with a DataFrame example, when to avoid it, and the difference between collect() and select().

This should be the accepted answer. The reason is that you stay in a Spark context throughout the process and only collect at the end, as opposed to getting out of the ...
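For the conversion named in the heading, one possible sketch uses `Row.toSeq` in one direction and `Row.fromSeq` plus an explicit schema in the other. It does not depend on the number or types of columns, but the schema for the way back has to be supplied by the caller. The names and sample data are illustrative.

```scala
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.types.StructType

object SeqOfSeqs {
  // DataFrame -> Seq[Seq[Any]]: each Row becomes a Seq of its field values.
  def toSeqs(df: DataFrame): Seq[Seq[Any]] =
    df.collect().map(_.toSeq).toSeq

  // Seq[Seq[Any]] -> DataFrame: every inner Seq must match `schema`.
  def fromSeqs(spark: SparkSession, data: Seq[Seq[Any]], schema: StructType): DataFrame =
    spark.createDataFrame(spark.sparkContext.parallelize(data.map(Row.fromSeq)), schema)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("seq-of-seqs").getOrCreate()
    import spark.implicits._

    val df = Seq((1, "a"), (2, "b")).toDF("id", "name") // made-up data
    val nested = toSeqs(df)
    fromSeqs(spark, nested, df.schema).show()

    spark.stop()
  }
}
```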

This post explains different approaches to creating a DataFrame (createDataFrame()) in Spark using Scala examples, e.g. how to create ...

Convert Spark DataFrame to Scala Map collection

A small code snippet to aggregate two columns of a Spark DataFrame to a map grouped by a third column.
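The snippet itself is not included here, so the following is a sketch of one way to do it, not necessarily the original author's. It assumes Spark 2.4 or later, where `map_from_entries` is available, and uses made-up column names. With Spark's default settings in 3.x, duplicate keys inside a group raise an error.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, collect_list, map_from_entries, struct}

object AggregateToMap {
  // Builds one map column "kv" per group from the key and value columns.
  def mapPerGroup(df: DataFrame, groupCol: String, keyCol: String, valueCol: String): DataFrame =
    df.groupBy(groupCol)
      .agg(map_from_entries(collect_list(struct(col(keyCol), col(valueCol)))).as("kv"))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("aggregate-to-map").getOrCreate()
    import spark.implicits._

    // Made-up sample data: (group, key, value).
    val df = Seq(("g1", "a", 1), ("g1", "b", 2), ("g2", "c", 3)).toDF("grp", "key", "value")

    // Collect the aggregated rows into a nested Scala Map on the driver.
    val perGroup: Map[String, Map[String, Int]] =
      mapPerGroup(df, "grp", "key", "value")
        .collect()
        .map(row => row.getString(0) -> row.getMap[String, Int](1).toMap)
        .toMap
    println(perGroup)

    spark.stop()
  }
}
```

The aggregation runs inside Spark and only the per-group result is collected, which keeps the driver-side work small.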

PySpark map() example with DataFrame: a PySpark DataFrame doesn't have a map() transformation to apply a lambda function; when you want to apply a custom ...

Create a Spark map from columns: using a MapType in Spark Scala DataFrames can be helpful, as it provides a flexible logical structure that can be used when ...
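As an illustration of building a MapType column from existing columns in Scala, here is a small sketch using the `map` function from `org.apache.spark.sql.functions`, imported under the alias `sqlMap` to keep it apart from the collection method. The column names and data are made up, and all map values must share one type.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, lit, map => sqlMap}

object MapFromColumns {
  // Adds a MapType column "props" with literal keys and column values.
  // Assumes the DataFrame has Double columns "height" and "weight".
  def withProps(df: DataFrame): DataFrame =
    df.withColumn(
      "props",
      sqlMap(lit("height"), col("height"), lit("weight"), col("weight")))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[*]").appName("map-from-columns").getOrCreate()
    import spark.implicits._

    val df = Seq(("ann", 1.7, 60.0)).toDF("name", "height", "weight") // made-up data
    val result = withProps(df)
    result.printSchema()
    result.show(truncate = false)

    spark.stop()
  }
}
```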

Once I have got some Row class in Spark, either DataFrame or Catalyst, I want to convert it to a case class in my code. This can be done by matching: someRow match {case ...
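The match expression is cut off above, so here is a sketch of what such a match could look like, relying on the extractor that `org.apache.spark.sql.Row` provides. The `Person` case class and its fields are hypothetical.

```scala
import org.apache.spark.sql.Row

// Hypothetical case class used only for this illustration.
final case class Person(name: String, age: Int)

object RowToCaseClass {
  // Returns Some(Person) when the Row has exactly a String and an Int field,
  // and None for any other shape (including null fields).
  def toPerson(row: Row): Option[Person] = row match {
    case Row(name: String, age: Int) => Some(Person(name, age))
    case _                           => None
  }

  def main(args: Array[String]): Unit = {
    println(toPerson(Row("Ann", 30)))  // matching shape
    println(toPerson(Row("Ann", "x"))) // wrong type in the second field
  }
}
```

Returning an `Option` avoids a `MatchError` when a row does not have the expected shape.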
