A Primer On Model Selection Using The Akaike Information Criterion

By: Ava

The model simplification method adopted was stepwise selection of the model with the lowest Akaike information criterion (AIC) [25]. The variance inflation factor (VIF) was used as a measure to analyse the magnitude of

Abstract The Akaike information criterion (AIC) is one of the most ubiquitous tools in statistical modeling. The first model selection criterion to gain widespread acceptance, AIC was introduced in 1973 by Hirotugu Akaike as an extension to the maximum likelihood principle.

Summary This article reviews the Akaike information criterion (AIC) and the Bayesian information criterion (BIC) in model selection and the appraisal of psychological theory. The focus is on latent variable models, given their growing use in theory testing and construction.

The Akaike Information Criterion, AIC, was introduced by Hirotugu Akaike in his seminal 1973 paper "Information Theory and an Extension of the Maximum Likelihood Principle." AIC was the first model selection criterion to gain widespread attention in the statistical community. Today, AIC continues to be the most widely known and used model selection tool

Epidemics on networks: Reducing disease transmission using health emergency declarations and peer communication

Akaike's information criterion (AIC) is increasingly being used in analyses in the field of ecology. This measure allows one to compare and rank

Information Criteria and Statistical Modeling

A brief guide to model selection, multimodel inference and model averaging in behavioural ecology using Akaike's information criterion
Matthew R. E. Symonds, Adnan Moussalli
Received: 19 April 2010 / Revised: 19 July 2010 / Accepted: 29 July 2010 / Published online: 25 August 201

The information criteria Akaike information criterion (AIC), AICc, and Bayesian information criterion (BIC) are widely used for model selection in

A powerful investigative tool in biology is to consider not a single mathematical model but a collection of models designed to explore different working hypotheses, and to select the best model in that collection. In these lecture notes, the usual workflow for using mathematical models to investigate a biological problem is described, and the use of a collection of models is motivated.
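Ranking a collection of models, as described above, is often summarised with AIC differences and Akaike weights. The snippet does not spell these out, so the following is only a minimal sketch using the standard definitions. The function name `akaike_weights` and the three AIC values are hypothetical, chosen purely for illustration.

```python
import math

def akaike_weights(aic_values):
    """Turn a {model name: AIC} mapping into Akaike weights.

    delta_i = AIC_i - min(AIC); w_i = exp(-delta_i / 2) / sum_j exp(-delta_j / 2).
    """
    best = min(aic_values.values())
    # Relative likelihood of each model compared with the best one.
    relative = {name: math.exp(-0.5 * (aic - best)) for name, aic in aic_values.items()}
    total = sum(relative.values())
    return {name: value / total for name, value in relative.items()}

# Hypothetical AIC values for three candidate models (illustration only).
example_aic = {"model A": 102.3, "model B": 100.0, "model C": 110.7}

weights = akaike_weights(example_aic)
# Models ordered from best supported to least supported.
ranking = sorted(weights, key=weights.get, reverse=True)
print(ranking)
```

The weights sum to one, so each can be read as the relative support for a model within this particular candidate set; they say nothing about models that were not included.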

The Akaike Information Criterion (AIC) is another tool to compare prediction models. AIC combines model accuracy and parsimony in a single

Fig. 2. Different translations of saturating rates. (Left) Hyperbolic saturation: as the amount increases, the rate increases but slows down (Michaelis-Menten dynamics or Holling type II function). (Right) Sigmoidal saturation: a slow-to-rapid, "switch-like" rise toward the limiting value (Holling type III function, cooperativity). – "A primer on model selection using

We derive and investigate a variant of AIC, the Akaike information criterion, for model selection in settings where the observed data is incomplete.

September 1, 2009 AIC, the Akaike Information Criterion, is generally regarded as the first model selection criterion.

A primer on model selection using the Akaike Information Criterion. Infectious Disease Modelling, 5, 111–128 | 10.1016/j.idm.2019.12.010
Stephanie Portet, Department of Mathematics, University of Manitoba, Winnipeg, Manitoba, R3T 2N2, Canada

Learn effective strategies to assess statistical model performance using Akaike Information Criterion and other metrics for comprehensive model evaluation.

Model Selection Using the Akaike Information Criterion

- Akaike’s information criterion

- Infectious Disease Modelling

- Information Criteria and Model Selection

Despite their broad use in model selection, the foundations of the Akaike information criterion (AIC), the corrected Akaike criterion (AICc) and the Bayesian information criterion (BIC) are, in general, poorly understood. The AIC, AICc and BIC penalize the likelihoods in order to select the simplest model.
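For reference, the three criteria are commonly written as below. This is a sketch of the standard textbook forms rather than a quotation from the source: the symbols $\hat{L}$ (maximised likelihood), $k$ (number of estimated parameters) and $n$ (sample size) are notation assumed here, and the AICc correction shown is the usual small-sample form.

```latex
\begin{align}
  \mathrm{AIC}  &= 2k - 2\ln\hat{L}, \\
  \mathrm{AICc} &= \mathrm{AIC} + \frac{2k(k+1)}{n-k-1}, \\
  \mathrm{BIC}  &= k\ln n - 2\ln\hat{L}.
\end{align}
```

In each case the term $-2\ln\hat{L}$ rewards fit and the remaining term penalises the number of parameters; the BIC penalty exceeds the AIC penalty whenever $\ln n > 2$, that is, for $n \ge 8$.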

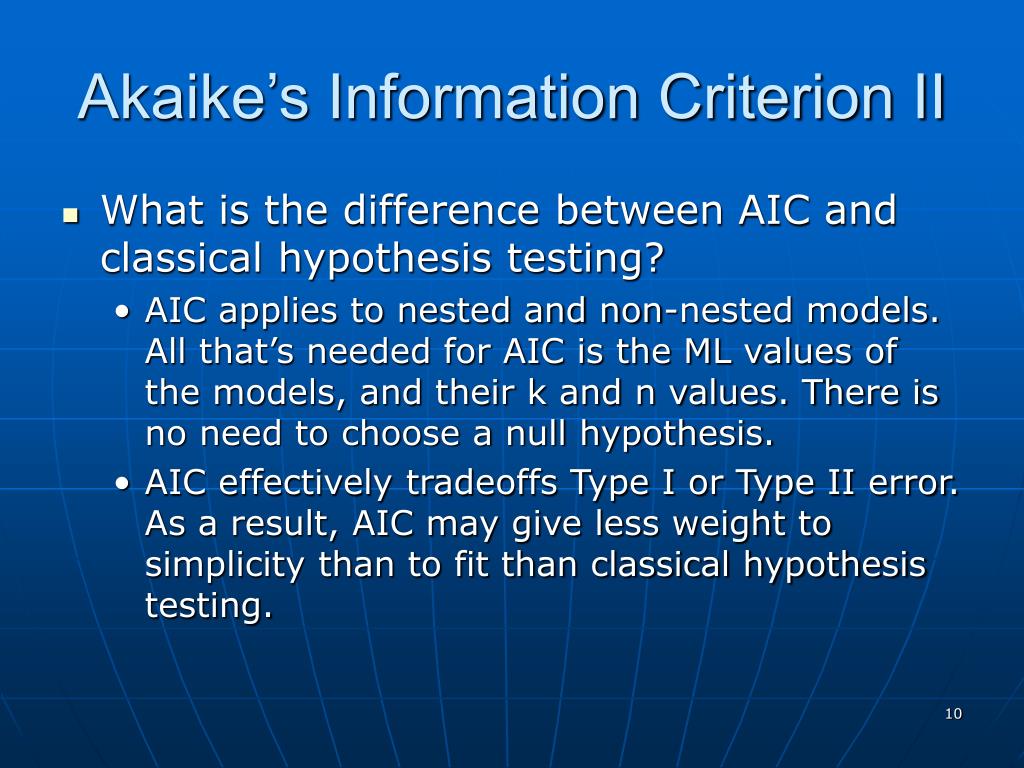

1. Motivation

The debates around the use of p-values to identify 'significant' effects [1, 2], Akaike information criterion (AIC) for selecting among models [3, 4] and optimal model selection strategies [5] are lively, and in some cases divisive. In lieu of a consensus on these ongoing debates, we avoid the inherent theoretical and philosophical arguments and

AIC & BIC Concepts Explained with Formula

In model selection for regression analysis, we often face the challenge of choosing the most

The Akaike Information Criterion (AIC) is a way of selecting a model from a set of models. The chosen model is the one estimated to minimize the Kullback-Leibler divergence between the model and the truth.

Maximum likelihood is conventionally applied to estimate the parameters of a model once the

Bayesian information criterion

In statistics, the Bayesian information criterion (BIC) or Schwarz information criterion (also SIC, SBC, SBIC) is a criterion for model selection among a finite set of models; models with lower BIC are generally preferred. It is based, in part, on the likelihood function and it is closely related to the Akaike information criterion

We will show how Akaike and Schwarz Information Criteria are useful in model selection and lag order selection in many models such as VAR or ARIMA models. Explore the significance of the Akaike Information Criterion in choosing the best statistical models and improve your data analysis for robust research outcomes.
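Lag order selection, as mentioned above, can be illustrated for a plain autoregressive model. The sketch below is an assumption-laden example, not the method of any cited source: it estimates the innovation variance of AR(0) to AR(6) with the Yule-Walker equations (Levinson-Durbin recursion) and uses the common approximate forms AIC(p) = n ln(sigma_p^2) + 2p and BIC(p) = n ln(sigma_p^2) + p ln(n). All function names and the simulated AR(2) series are hypothetical.

```python
import math
import random

def autocovariances(series, max_lag):
    """Biased sample autocovariances for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    centred = [v - mean for v in series]
    return [sum(centred[t] * centred[t - lag] for t in range(lag, n)) / n
            for lag in range(max_lag + 1)]

def innovation_variances(series, max_lag):
    """Yule-Walker innovation variance for AR(0)..AR(max_lag) via Levinson-Durbin."""
    r = autocovariances(series, max_lag)
    phi = []            # coefficients of the current AR(p-1) fit
    variance = r[0]     # AR(0): variance of the series itself
    variances = [variance]
    for p in range(1, max_lag + 1):
        acc = r[p] - sum(phi[j] * r[p - 1 - j] for j in range(p - 1))
        kappa = acc / variance  # partial autocorrelation at lag p
        phi = [phi[j] - kappa * phi[p - 2 - j] for j in range(p - 1)] + [kappa]
        variance *= 1.0 - kappa ** 2
        variances.append(variance)
    return variances

def select_lag(series, max_lag):
    """Return the lag orders minimising the approximate AIC and BIC."""
    n = len(series)
    variances = innovation_variances(series, max_lag)
    aic = [n * math.log(v) + 2 * p for p, v in enumerate(variances)]
    bic = [n * math.log(v) + p * math.log(n) for p, v in enumerate(variances)]
    return aic.index(min(aic)), bic.index(min(bic))

# Simulated AR(2) series (hypothetical coefficients 0.6 and -0.3).
rng = random.Random(1)
series = [0.0, 0.0]
for _ in range(500):
    series.append(0.6 * series[-1] - 0.3 * series[-2] + rng.gauss(0.0, 1.0))

aic_order, bic_order = select_lag(series, max_lag=6)
print("AIC picks lag", aic_order, "and BIC picks lag", bic_order)
```

Because the BIC penalty per parameter is larger than the AIC penalty for this sample size, the order chosen by BIC can never exceed the order chosen by AIC on the same series.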


Understand Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) for comparing model complexity and fit.

8.4 Information Criteria for Model Selection

Information Criteria are statistical tools used to compare competing models by balancing model fit (likelihood) with model complexity (number of parameters). They help in identifying the most parsimonious model that adequately explains the data without overfitting. The three most commonly used

Abstract The rapid development of modeling techniques has brought many opportunities for data-driven discovery and prediction. However, this also leads to the challenge of selecting the most appropriate model for any particular data task. Information criteria, such as the Akaike information criterion (AIC) and Bayesian information criterion (BIC), have been developed as a general

Information Criteria AIC & BIC for Model Selection

The Akaike information criterion (AIC) tests how well a model fits the data it is made from. In statistics, it is often used for model selection. To apply AIC in practice, we start with a set of candidate models, and then find the models' corresponding AIC values. There will almost always be information lost due to using a candidate model to represent the "true model," i.e. the process that generated the data. We wish to select, from among the candidate models, the model that minimizes the information loss. We cannot
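The workflow just described (take a set of candidate models, compute each AIC value, keep the smallest) can be sketched as follows. This is a minimal illustration under stated assumptions: the three candidate models are least-squares fits with independent Gaussian errors, the data are made up, and the helper `gaussian_aic` is a hypothetical name rather than a library function.

```python
import math

def gaussian_aic(rss, n, n_coef):
    """AIC of a least-squares fit with i.i.d. Gaussian errors.

    rss is the residual sum of squares; k counts the regression
    coefficients plus the estimated error variance.
    """
    sigma2 = rss / n  # maximum likelihood estimate of the error variance
    log_lik = -0.5 * n * (math.log(2 * math.pi * sigma2) + 1)
    k = n_coef + 1
    return 2 * k - 2 * log_lik

# Made-up data, for illustration only.
x = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
y = [2.1, 2.4, 3.2, 3.4, 4.1, 4.4, 5.2, 5.3, 6.1, 6.6]
n = len(x)
x_bar = sum(x) / n
y_bar = sum(y) / n

# Candidate 1: constant mean, y = a.
rss_const = sum((yi - y_bar) ** 2 for yi in y)

# Candidate 2: line through the origin, y = b * x.
b_origin = sum(xi * yi for xi, yi in zip(x, y)) / sum(xi * xi for xi in x)
rss_origin = sum((yi - b_origin * xi) ** 2 for xi, yi in zip(x, y))

# Candidate 3: straight line with intercept, y = a + b * x.
slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
intercept = y_bar - slope * x_bar
rss_line = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))

aic = {
    "intercept only": gaussian_aic(rss_const, n, n_coef=1),
    "slope only": gaussian_aic(rss_origin, n, n_coef=1),
    "intercept + slope": gaussian_aic(rss_line, n, n_coef=2),
}
best_model = min(aic, key=aic.get)  # the candidate with the lowest AIC
print(best_model)
```

Only differences between AIC values computed on the same data are meaningful; the absolute numbers carry no interpretation on their own.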

What is AIC (Akaike Information Criterion)?

The Akaike Information Criterion (AIC) is a widely used statistical measure that helps in model selection. Developed by Hirotugu Akaike in 1974, AIC provides a means for comparing different statistical models based on their relative quality for a given dataset. It is particularly useful in the fields of statistics,
